Caching Data for LHC Analysis

LHC

Significant portions of LHC analysis use the same datasets, running over each dataset several times. Cache-based approaches therefore offer an opportunity to improve the efficiency of both CPU use (via reduced latency) and the network (via reduced WAN traffic). We are investigating the use of regional caches to store, on demand, certain datasets relevant to analysis use cases. The aim of the caches is to speed up overall analysis and reduce overall network resource consumption, both of which are predicted to be significant challenges in the HL-LHC era as data volumes and event counts increase.
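To illustrate why repeated reads of the same datasets make caching worthwhile, here is a minimal simulation of an on-demand cache. It assumes whole-file caching and least-recently-used (LRU) eviction under a fixed byte capacity. These are simplifying assumptions for illustration and not necessarily how XCache stores or evicts data; the class name `OnDemandCache` is hypothetical.

```python
from collections import OrderedDict


class OnDemandCache:
    """Hypothetical byte-capacity LRU cache: a miss fetches the whole file over the WAN."""

    def __init__(self, capacity_bytes: int):
        self.capacity = capacity_bytes
        self.used = 0
        self.files = OrderedDict()  # name -> size, least recently used first
        self.wan_bytes = 0          # bytes fetched from remote storage (misses)
        self.served_bytes = 0       # bytes delivered to analysis jobs (hits + misses)

    def read(self, name: str, size: int) -> bool:
        """Serve one file read; return True on a cache hit."""
        self.served_bytes += size
        if name in self.files:
            self.files.move_to_end(name)  # mark as most recently used
            return True
        self.wan_bytes += size  # miss: the file crosses the WAN once
        if size > self.capacity:
            return False  # too large to keep; pass through without caching
        # Evict least recently used files until the new one fits
        while self.used + size > self.capacity:
            _, evicted_size = self.files.popitem(last=False)
            self.used -= evicted_size
        self.files[name] = size
        self.used += size
        return False


# Usage: three 10-unit files, each read three times, with room for all of them
cache = OnDemandCache(capacity_bytes=40)
for _ in range(3):
    for name in ("a", "b", "c"):
        cache.read(name, 10)
print(cache.served_bytes / cache.wan_bytes)  # WAN traffic reduced by this factor
```

In this toy trace every file crosses the WAN only once, so the WAN volume is a third of what the jobs read. Real savings depend on the access pattern and on whether the working set fits in the cache.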

In Southern California, the UCSD CMS Tier-2 and Caltech CMS Tier-2 joined forces to create and maintain a regional cache, commonly referred to as the “CMS SoCal cache”, which benefits all Southern California CMS researchers. The SoCal cache was augmented through a joint project with ESnet that integrated a caching server into the SoCal cache. The server is deployed at the ESnet point of presence in Sunnyvale, CA, but is managed by staff at UCSD through the PRP project’s Kubernetes-based Nautilus cluster.

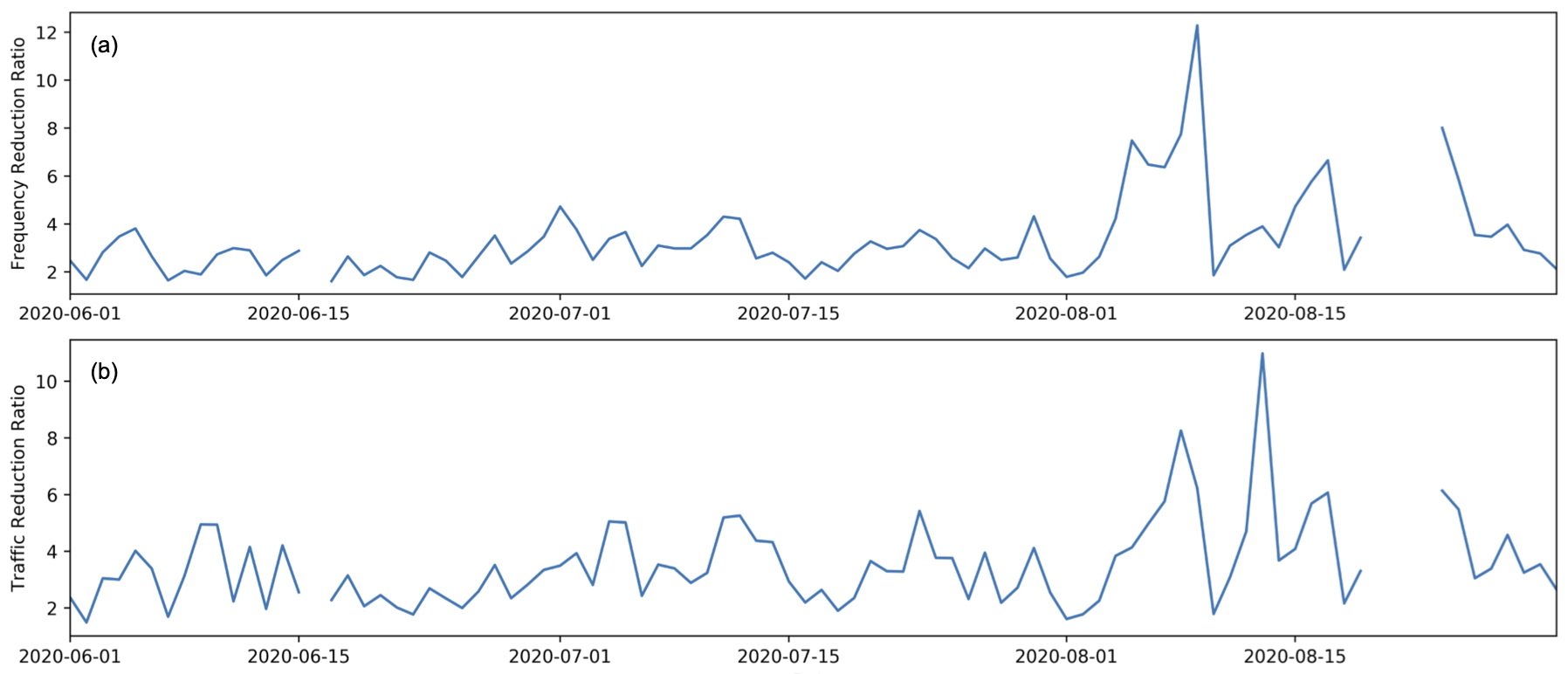

A recent ESnet study examined the network savings provided by the SoCal cache. The study analyzed the XRootD monitoring records from the XCache servers and showed a factor of 3 reduction in network bandwidth over the analyzed period.

Network utilization savings

Network utilization reduction ratio in terms of (a) number of accesses and (b) volume transferred.
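A reduction ratio of this kind can be computed from per-access monitoring records. The sketch below assumes a hypothetical, simplified record (`AccessRecord` with a path, bytes read, and a hit flag) and defines the ratio as total demand divided by the demand that missed the cache and had to be fetched over the WAN. Real XRootD monitoring records are richer (for example, partial hits on byte ranges), so treat this as an illustration of the definition rather than the study's method.

```python
from dataclasses import dataclass


@dataclass
class AccessRecord:
    # Hypothetical, simplified view of one cache access
    path: str
    bytes_read: int
    hit: bool


def reduction_ratios(records):
    """Return (ratio by number of accesses, ratio by volume).

    Each ratio is total demand divided by demand that missed the cache,
    i.e. how many times smaller the WAN load is than the client load.
    """
    total_n = miss_n = total_b = miss_b = 0
    for r in records:
        total_n += 1
        total_b += r.bytes_read
        if not r.hit:
            miss_n += 1
            miss_b += r.bytes_read
    by_access = total_n / miss_n if miss_n else float("inf")
    by_volume = total_b / miss_b if miss_b else float("inf")
    return by_access, by_volume


# Usage with made-up records: each file is fetched once, then read twice from cache
records = [
    AccessRecord("/store/file_a", 10, False),
    AccessRecord("/store/file_a", 10, True),
    AccessRecord("/store/file_a", 10, True),
    AccessRecord("/store/file_b", 5, False),
    AccessRecord("/store/file_b", 5, True),
    AccessRecord("/store/file_b", 5, True),
]
print(reduction_ratios(records))
```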

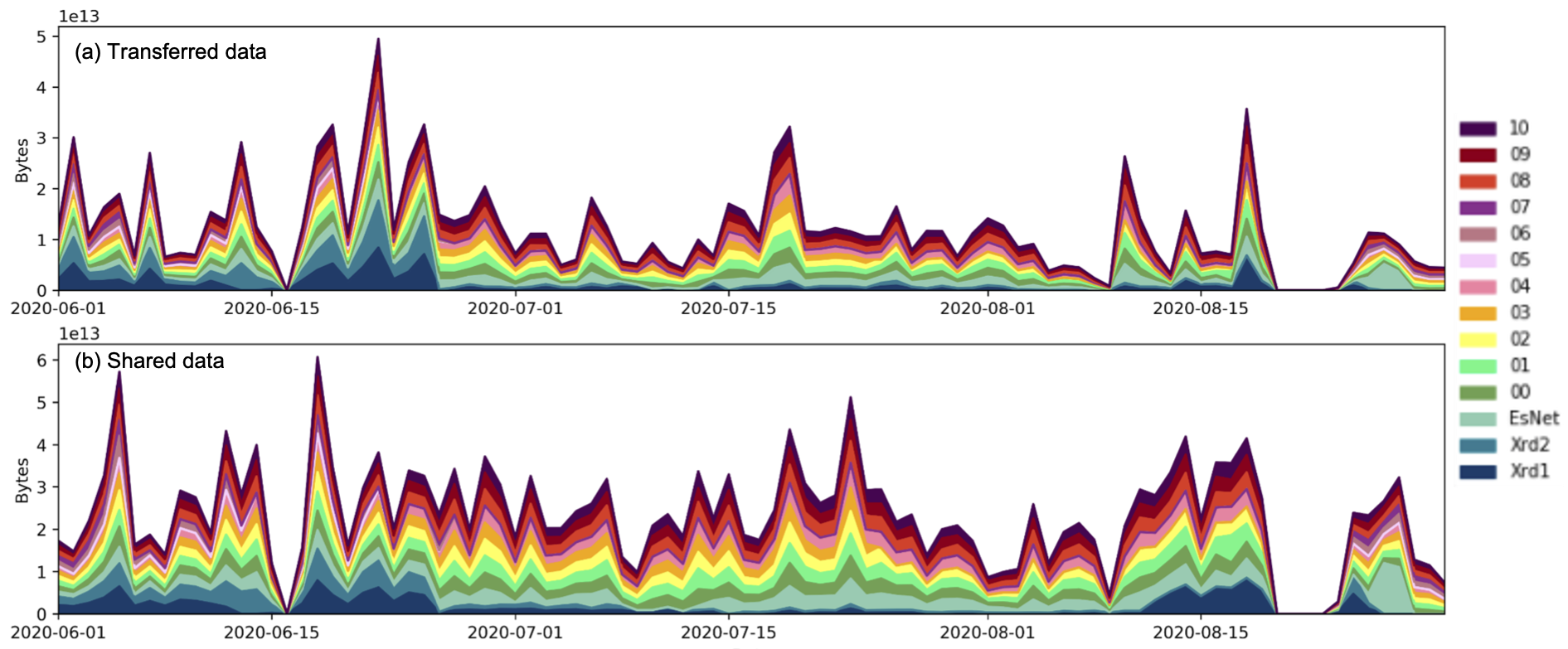

The same study also showed that accesses to the cache are evenly distributed among the different servers that make up the SoCal cache.

Misses (a) and hits (b) distribution in the SoCal cache

The above shows the distribution of hits and misses among servers in the SoCal cache.
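The per-server distribution can be derived by counting hits and misses per server and normalizing each count to a share of the total. The sketch below assumes each record has already been reduced to a hypothetical `(server, hit)` pair; server names are placeholders. An even distribution shows up as roughly equal shares across servers.

```python
from collections import Counter


def share_by_server(records):
    """Return (miss shares, hit shares), each a dict of server -> fraction of the total."""
    hits, misses = Counter(), Counter()
    for server, hit in records:
        (hits if hit else misses)[server] += 1

    def normalize(counts):
        total = sum(counts.values())
        return {server: n / total for server, n in counts.items()} if total else {}

    return normalize(misses), normalize(hits)


# Usage with hypothetical server names
records = [("server-1", True), ("server-1", False), ("server-2", True), ("server-2", False)]
miss_share, hit_share = share_by_server(records)
print(miss_share, hit_share)
```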

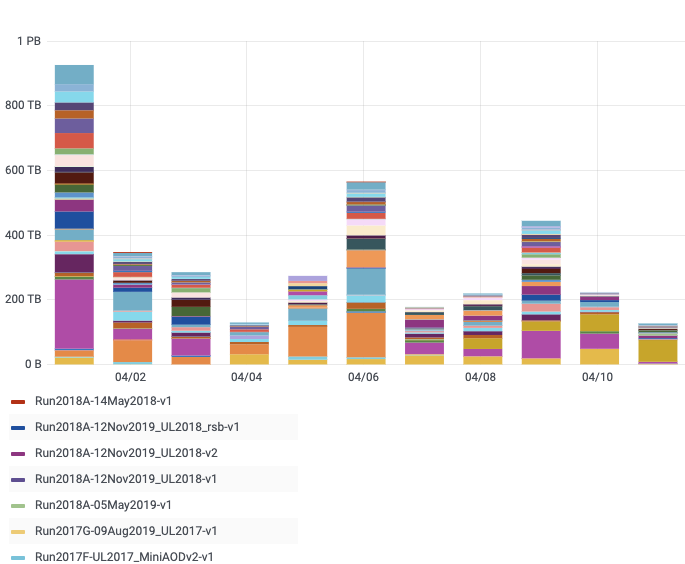

The IRIS-HEP team worked with CMS to create a monitoring page showing the popularity of the data analyzed through the SoCal cache, which provides guidance on how the popularity of files in the namespace evolves over time.

CMS data popularity

The above shows the distribution of accessed data volume for CMS analysis tasks, broken down by data campaign.
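A popularity breakdown of this kind amounts to mapping each accessed file to a campaign and summing the bytes read. The sketch below assumes the campaign is the path component immediately after `/store/mc/` or `/store/data/` in the logical file name, following the usual CMS naming layout; anything else is grouped as `"other"`. The function names and example paths are hypothetical.

```python
from collections import defaultdict


def campaign_of(lfn: str) -> str:
    """Extract a campaign from a CMS-style logical file name (assumed layout)."""
    parts = lfn.strip("/").split("/")
    # Assumed layout: store/<mc|data>/<campaign>/<dataset>/...
    if len(parts) >= 3 and parts[0] == "store" and parts[1] in ("mc", "data"):
        return parts[2]
    return "other"


def volume_by_campaign(accesses):
    """Sum bytes read per campaign; return (campaign, bytes) pairs, largest first."""
    totals = defaultdict(int)
    for lfn, nbytes in accesses:
        totals[campaign_of(lfn)] += nbytes
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


# Usage with made-up paths and byte counts
accesses = [
    ("/store/mc/CampaignA/SampleX/NANOAODSIM/f1.root", 30),
    ("/store/mc/CampaignA/SampleY/NANOAODSIM/f2.root", 20),
    ("/store/data/EraB/StreamZ/NANOAOD/f3.root", 40),
    ("/store/user/someone/f4.root", 5),
]
print(volume_by_campaign(accesses))
```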

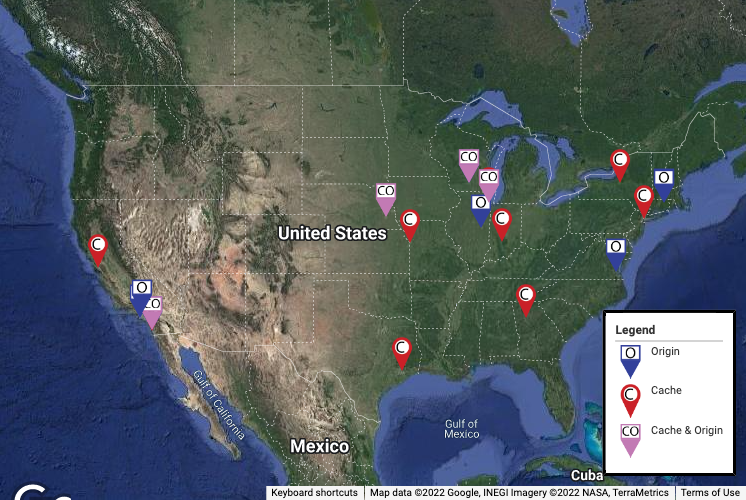

Open Science Data Federation (OSDF)

Similar to the LHC experiments, the OSG has deployed a set of caches and origins that serve both public and authenticated data from diverse experiments and individual researchers. The following image shows the locations of the different origins and caches that make up the federation.

Open Science Data Federation

Locations of the different caches and origins within the OSDF.
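With caches spread across many sites, a client needs some way to choose which one to contact. One common approach is to rank caches by great-circle distance from the client's (for example, GeoIP-derived) location. The sketch below shows that idea with the haversine formula; it is an illustration under that assumption, not the OSDF's actual selection logic, and the cache names and coordinates are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def rank_caches(client, caches):
    """Return cache names ordered from nearest to farthest from the client."""
    lat, lon = client
    return sorted(caches, key=lambda name: haversine_km(lat, lon, *caches[name]))


# Usage with hypothetical caches (approximate city coordinates)
caches = {
    "cache-east": (40.7, -74.0),
    "cache-central": (41.9, -87.6),
    "cache-west": (34.0, -118.2),
}
print(rank_caches((32.7, -117.2), caches))
```

A real deployment would typically also consider cache health and load, falling back to the next entry in the ranked list when the nearest cache is unavailable.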

For more information on how to join the OSDF, see “How to join the Open Science Data Federation” under Documentation below.

Monitoring improvements

Over the past couple of years, significant effort has been dedicated to understanding and fixing the issues affecting the collection of XRootD monitoring data. The first study, XRootD Monitoring Validation, was carried out to understand the data loss and found that the cause was a common UDP issue known as “UDP packet fragmentation”. The second study, XRootD Monitoring Scale Validation, was carried out to find the limitations of the monitoring collector when operating at a higher scale.
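A back-of-the-envelope calculation shows why fragmentation hurts UDP monitoring: a datagram larger than the path MTU is split into several IP fragments, and if any single fragment is lost the receiver cannot reassemble the datagram, so the whole datagram is dropped. The sketch assumes IPv4 with a 20-byte header (no options), an 8-byte UDP header, and independent loss of each fragment; the function names are hypothetical.

```python
import math

IPV4_HEADER = 20  # bytes, assuming no IP options
UDP_HEADER = 8    # bytes


def ipv4_fragments(udp_payload: int, mtu: int = 1500) -> int:
    """Number of IPv4 fragments needed to carry one UDP datagram."""
    ip_payload = udp_payload + UDP_HEADER
    # Every fragment except the last must carry a multiple of 8 data bytes
    per_fragment = (mtu - IPV4_HEADER) // 8 * 8
    return math.ceil(ip_payload / per_fragment)


def delivery_probability(udp_payload: int, fragment_loss: float, mtu: int = 1500) -> float:
    """Probability the datagram arrives intact, assuming independent fragment loss."""
    return (1 - fragment_loss) ** ipv4_fragments(udp_payload, mtu)


# Usage: compare a datagram that fits in one packet with a larger one
for size in (1472, 8000):
    print(size, ipv4_fragments(size), round(delivery_probability(size, 0.01), 4))
```

With a 1% per-fragment loss rate, a datagram split into six fragments is lost roughly six times as often as one that fits in a single packet, which is why large monitoring packets are disproportionately affected.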

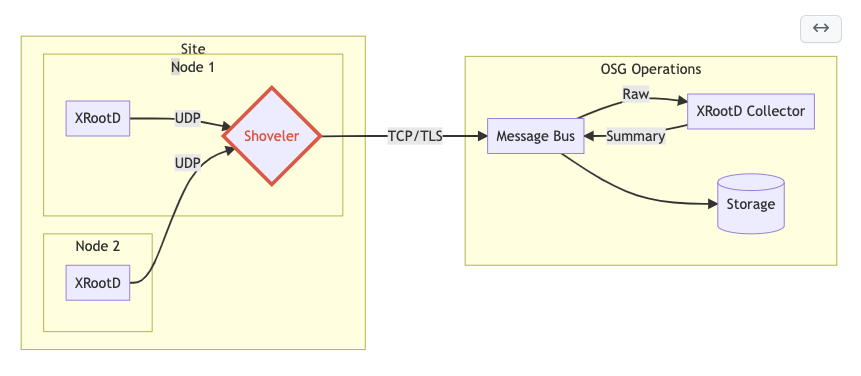

As a result of the first study, a new component called the shoveler was introduced into the monitoring infrastructure to prevent data loss due to UDP packet fragmentation. As depicted in the next figure, this lightweight component uses a secure and reliable channel to send the monitoring data from the XRootD servers to the central monitoring collector operated by OSG.

The shoveler

The shoveler is deployed between the XRootD server(s) and the XRootD collector to ensure a reliable channel.
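The core idea of such a relay is to receive UDP packets close to the XRootD server, where loss is unlikely, and forward them over a reliable stream so that packet boundaries and delivery are preserved. The sketch below is a minimal illustration of that pattern using a 4-byte length prefix over a stream socket; it is not the shoveler's implementation, and its transport, security, and configuration are not reproduced here. The names `frame`, `unframe`, and `shovel` are hypothetical.

```python
from __future__ import annotations

import socket
import struct

# 4-byte big-endian length prefix so packet boundaries survive the byte stream
HEADER = struct.Struct("!I")


def frame(packet: bytes) -> bytes:
    return HEADER.pack(len(packet)) + packet


def unframe(buf: bytes) -> list[bytes]:
    """Split a byte stream back into packets, ignoring an incomplete trailing packet."""
    packets, offset = [], 0
    while offset + HEADER.size <= len(buf):
        (length,) = HEADER.unpack_from(buf, offset)
        start = offset + HEADER.size
        if start + length > len(buf):
            break  # incomplete trailing packet; wait for more data
        packets.append(buf[start:start + length])
        offset = start + length
    return packets


def shovel(udp_sock: socket.socket, out_sock: socket.socket, max_packets: int) -> int:
    """Forward up to max_packets UDP datagrams onto a reliable stream socket."""
    forwarded = 0
    while forwarded < max_packets:
        packet, _addr = udp_sock.recvfrom(65535)  # largest possible UDP datagram
        out_sock.sendall(frame(packet))
        forwarded += 1
    return forwarded
```

Framing is needed because a stream socket delivers a continuous byte sequence; without the length prefix the collector could not tell where one monitoring packet ends and the next begins.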

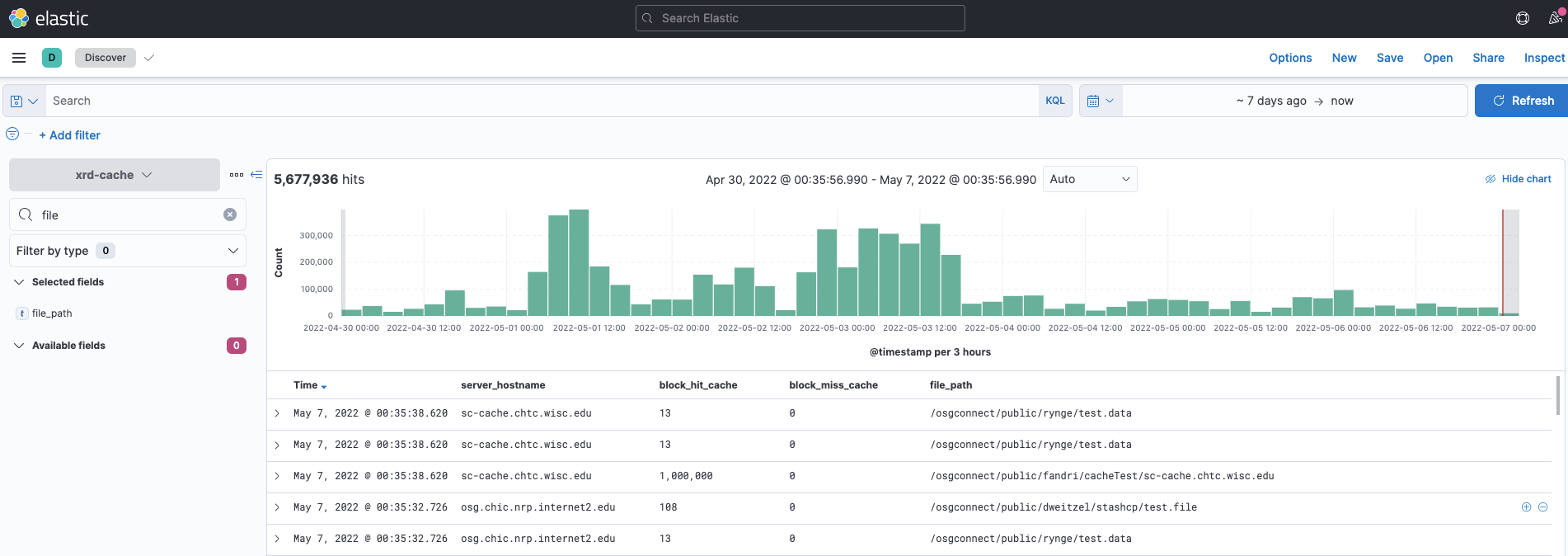

Finally, software improvements to the OSG collector have enabled us to start collecting and analyzing g-stream data, the XRootD monitoring stream that includes cache-specific events. The figure below shows an example of the g-stream data being collected from the caches in the OSDF.

XRootD g-stream monitoring data

An example of the g-stream data collected from the caches in the OSDF.

Repositories

Currently, XCache is distributed by the OSG Software Stack (authored by the OSG-LHC area) both as native packages for Red Hat Enterprise Linux (RPMs) and as container (Docker) images. Repositories of note include:

Reports

- Report on cache usage on the WLCG and potential use cases and deployment scenarios for the US LHC facilities

- XRootD Monitoring Validation

- XRootD Monitoring Scale Validation

Documentation

- How to join the Open Science Data Federation

- OSG Documentation on cache deployment (System administrator documentation)

- Report on LHC data access patterns, data uses, and intelligent caching approaches for the HL-LHC

Team

- Brian Bockelman

- Igor Sfiligoi

- Frank Wuerthwein

- Matevz Tadel

- Diego Davila

Presentations

- 30 Mar 2023 - "XCache Developments & Plans", Matevz Tadel, XRootD and FTS Workshop

- 25 Feb 2021 - "GeoIP HTTPS Redirector", Edgar Fajardo, XCache DevOps Meeting

- 15 Sep 2020 - "Data lake prototyping for US CMS", Edgar Fajardo, DOMA / ACCESS Meeting

- 4 Sep 2020 - "A US Data Lake", Edgar Fajardo, OSG All Hands Meeting (US ATLAS/CMS Combined session)

- 2 Sep 2020 - "Stashcache: CDN for Science", Edgar Fajardo, OSG All Hands Meeting

- 23 Apr 2020 - "How CMS user jobs use the caches", Edgar Fajardo, XCache DevOps SPECIAL

- 22 Apr 2020 - "XRootD Transfer Accounting Validation Plan", Diego Davila, S&C Blueprint Meeting

- 27 Feb 2020 - "XCache", Edgar Fajardo, IRIS-HEP Poster Session

- 5 Nov 2019 - "Creating a content delivery network for general science on the backbone of the Internet using xcaches.", Igor Sfiligoi, CHEP 2019

- 5 Nov 2019 - "Creating a content delivery network for general science on the backbone of the Internet using xcaches.", Edgar Fajardo, CHEP 2019

- 5 Nov 2019 - "Moving the California distributed CMS xcache from bare metal into containers using Kubernetes", Edgar Fajardo, CHEP 2019

- 12 Sep 2019 - "OSG XCache Discussion", Frank Wuerthwein, IRIS-HEP retreat

- 31 Jul 2019 - "CMS XCache Monitoring Dashboard", Diego Davila, OSG Area Coordination

- 8 Jul 2019 - "XCache Initiatives and Experiences", Frank Wuerthwein, pre-GDB meeting on XCache

- 11 Jun 2019 - "XCache Packaging", Brian Lin, XRootD Workshop

- 20 Mar 2019 - "Data Access in DOMA", Frank Wuerthwein, HOW2019 (Joint HSF/OSG/WLCG Workshop)

- 7 Mar 2019 - "The OSG Data Federation", Frank Wuerthwein, Internet2 Global Summit 2019

- 16 Jan 2019 - "OSG Cache on Internet Backbone developments", Edgar Fajardo, GDB Jan 2019

- 12 Dec 2018 - "OSG-LHC and XCache", Brian Lin, ATLAS Software & Computing Week #61

- 2 Oct 2018 - "Current production use of caching for CMS in Southern California", Edgar Fajardo, DOMA / ACCESS Meeting

Publications

- Predicting Future Accesses in XRootD Caching Systems using ML-Based Network Pattern Analysis, S. S. Barla, V. S. S. L. Karanam, B. Ramamurthy, and D. Weitzel. 2024 IEEE International Conference on Advanced Networks and Telecommunications Systems (ANTS), Dec. 2024, pp. 1–6, doi: 10.1109/ANTS63515.2024.10898672 (01 Dec 2024).

- Analyzing Transatlantic Network Traffic over Scientific Data Caches, Ziyue Deng, Alex Sim, Kesheng Wu, Chin Guok, Inder Monga, Fabio Andrijauskas, Frank Wuerthwein, and Derek Weitzel. ACM 6th International Workshop on System and Network Telemetry and Analytics (SNTA'23) (20 Jun 2023).

- Effectiveness and predictability of in-network storage cache for Scientific Workflows, Caitlin Sim, Kesheng Wu, Alex Sim, Inder Monga, Chin Guok, Frank Würthwein, Diego Davila, Harvey Newman, and Justas Balcas. 2023 (22 Feb 2023).

- Access Trends of In-network Cache for Scientific Data, Ruize Han, Alex Sim, Kesheng Wu, Inder Monga, Chin Guok, Frank Würthwein, Diego Davila, Justas Balcas, Harvey Newman. 2022 (11 May 2022).

- Studying Scientific Data Lifecycle in On-demand Distributed Storage Caches, Julian Bellavita, Alex Sim, Kesheng Wu, Inder Monga, Chin Guok, Frank Würthwein, Diego Davila. 2022 (11 May 2022).

- Analyzing scientific data sharing patterns for in-network data caching, Elizabeth Copps, Huiyi Zhang, Alex Sim, Kesheng Wu, Inder Monga, Chin Guok, Frank Würthwein, Diego Davila, and Edgar Fajardo. 2021 (21 Jun 2021).

- Creating a content delivery network for general science on the internet backbone using XCaches, Edgar Fajardo, Marian Zvada, Derek Weitzel, Mats Rynge, John Hicks, Mat Selmeci, Brian Lin, Pascal Paschos, Brian Bockelman, Igor Sfiligoi, Andrew Hanushevsky, and Frank Würthwein. arXiv:2007.01408 [cs.DC] (submitted to CHEP 2019) (08 Nov 2019).