Accelerators and ML for reconstruction

This project has ended within the context of IRIS-HEP. Related work continues in a number of projects including IAIFI and A3D3.

Machine learning has become an extremely popular solution for a broad range of problems in high energy physics, from jet tagging to signal extraction. While machine learning can offer unrivaled performance, it can also be expensive in terms of both latency and computation. The machine learning revolution has driven the emergence of new processor technologies that deliver large algorithmic speedups, including GPUs and Field Programmable Gate Arrays (FPGAs). GPUs and FPGAs offer different advantages: GPUs yield very large speedups in algorithmic throughput, whereas FPGAs yield very large speedups in the latency of a single inference. The speedups offered by both technologies are remarkable, and the goal of this project is to demonstrate the effectiveness of these new processors within realistic workflows at the LHC and eventually to deploy them. The strategy is to identify specific algorithms with long latencies and replace them with significantly faster machine learning algorithms. So far, the focus of this work has been the reconstruction of the CMS hadronic calorimeter (HCAL).
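The throughput/latency distinction between GPUs and FPGAs can be made concrete with a toy cost model in which every call to an accelerator pays a fixed overhead plus a per-inference cost for each item in the batch. The `Device` class and all of its numbers are hypothetical, chosen only to illustrate the trade-off; they are not measurements of any real GPU or FPGA.

```python
from dataclasses import dataclass


@dataclass
class Device:
    """Toy cost model (hypothetical): every call pays a fixed overhead, plus a
    per-inference cost for each item in the batch. Times are in microseconds."""
    name: str
    overhead_us: float
    per_item_us: float

    def latency_us(self, batch: int) -> float:
        # Time until a call returns, i.e. the latency seen by any one item in the batch.
        return self.overhead_us + self.per_item_us * batch

    def throughput_per_s(self, batch: int) -> float:
        # Inferences completed per second when calls are issued back to back.
        return batch / self.latency_us(batch) * 1e6


if __name__ == "__main__":
    # Illustrative parameters only, not measurements of any real device.
    gpu_like = Device("GPU-like", overhead_us=100.0, per_item_us=0.1)
    fpga_like = Device("FPGA-like", overhead_us=1.0, per_item_us=1.0)
    for batch in (1, 10_000):
        for dev in (gpu_like, fpga_like):
            print(f"{dev.name:9s} batch={batch:6d} "
                  f"latency={dev.latency_us(batch):9.1f} us "
                  f"throughput={dev.throughput_per_s(batch):12.0f}/s")
```

With these made-up parameters, the FPGA-like device answers a single request about 50 times sooner, while the GPU-like device completes roughly nine times more inferences per second once requests are batched.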

This work consists of both the further development of the hls4ml tool (J. Duarte et al. 2018) and the study of applications for fast inference on FPGAs and GPUs. Ongoing developments of the hls4ml tool itself include wider support for neural network layer architectures and machine learning libraries, and improvements to the performance of the tool for large networks. Ongoing studies on the applications of machine learning on FPGAs include their use for particle tracking, calorimeter reconstruction, and particle identification.
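hls4ml translates trained networks into FPGA firmware that computes with fixed-point rather than floating-point numbers. The sketch below is a pure-Python emulation of that idea for a single dense layer with ReLU activation, not hls4ml's own code. It assumes a signed format with 16 total bits, 6 of them integer bits (written `ap_fixed<16,6>` in Xilinx HLS, a precision often used as a default in hls4ml configurations), truncates toward negative infinity, and saturates on overflow; `ap_fixed` itself wraps around on overflow by default, with saturation available as an option. The names `quantize` and `dense_relu_fixed` are hypothetical.

```python
import math


def quantize(x: float, width: int = 16, integer: int = 6) -> float:
    """Map x onto a signed fixed-point grid with `width` total bits, `integer` of
    which (including the sign bit) sit left of the binary point.
    Truncates toward negative infinity and saturates at the range limits."""
    frac_bits = width - integer
    step = 2.0 ** -frac_bits                 # smallest representable increment
    lo = -(2.0 ** (integer - 1))             # most negative representable value
    hi = 2.0 ** (integer - 1) - step         # most positive representable value
    q = math.floor(x / step) * step          # truncate onto the grid
    return min(max(q, lo), hi)               # saturate instead of wrapping


def dense_relu_fixed(x, weights, biases, width=16, integer=6):
    """One fully connected layer followed by ReLU, with inputs, weights, biases
    and outputs quantized. The accumulator is kept in float here, a
    simplification: hls4ml configurations can set accumulator precision separately."""
    out = []
    for row, b in zip(weights, biases):
        acc = quantize(b, width, integer)
        for w, xi in zip(row, x):
            acc += quantize(w, width, integer) * quantize(xi, width, integer)
        out.append(max(quantize(acc, width, integer), 0.0))  # ReLU on the quantized sum
    return out


if __name__ == "__main__":
    print(quantize(0.1))        # 0.1 is not exactly representable on this grid
    print(quantize(100.0))      # out of range: saturates to the largest value
    print(dense_relu_fixed([1.0, 2.0], [[0.5, 0.25], [-1.0, 0.0]], [0.0, 0.5]))
```

Comparing such an emulation against the floating-point model is one way to check whether a chosen bit width loses accuracy before committing to a firmware build.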

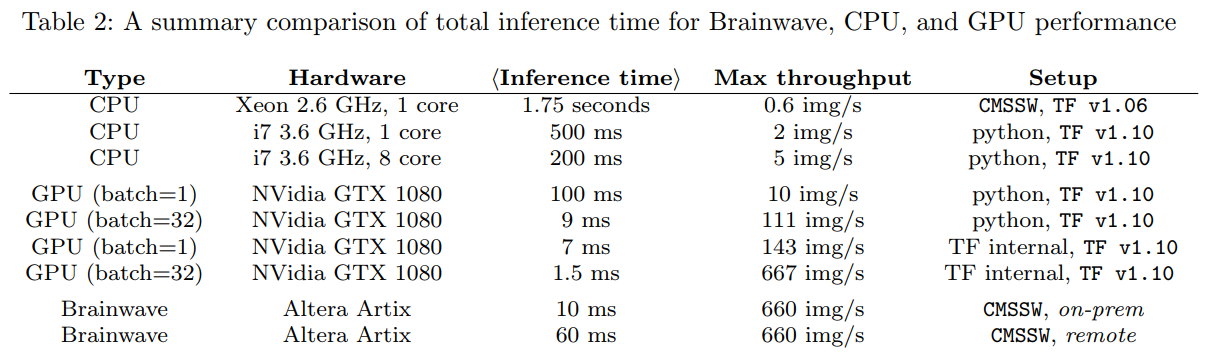

This work also involves studies of large-scale heterogeneous computing using Brainwave on Microsoft Azure, Google Cloud Platform (GCP), and Amazon Web Services (AWS). Brainwave and GCP have both been used to study the capabilities of cloud-based heterogeneous computing (J. Duarte et al. 2019), which appears extremely promising as an option for low-cost, low-latency inference acceleration (see table below). AWS has been used extensively to prototype applications in heterogeneous environments and will also be used to investigate alternative models for cloud-based heterogeneous computing.

Out of this work has emerged the SONIC framework. This framework exploits asynchronous scheduling from Intel Threading Building Blocks (TBB) to run CMS reconstruction and remote neural network inference simultaneously at the LHC. In particular, we have developed an HCAL reconstruction algorithm that improves HCAL reconstruction as well as missing transverse momentum (MET) and jet performance, and that can be run within SONIC. We observe a 10% reduction in the processing time of the CMS High-Level Trigger (HLT) reconstruction, and we have constructed a set of guidelines for deploying as-a-service computing at the LHC.
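The key idea behind SONIC is that a reconstruction job does not sit idle while a remote coprocessor evaluates a network: the request is sent, other work proceeds, and processing resumes when the result returns. The sketch below illustrates that pattern with Python's `asyncio` as a stand-in for Intel TBB and CMSSW; it is not SONIC's API. `remote_inference` is a hypothetical placeholder that only sleeps to mimic network and coprocessor latency, and the split into a request step and a completion step loosely mirrors the acquire/produce structure of the CMSSW ExternalWork modules on which SONIC builds.

```python
import asyncio
import time


async def remote_inference(features):
    """Hypothetical stand-in for a request to a remote inference server:
    it only sleeps to mimic network + coprocessor latency, then returns a fake score."""
    await asyncio.sleep(0.05)
    return sum(features) / len(features)


async def process_event(event_id, features):
    # "acquire"-like step: prepare inputs and send the request; the worker is free while it waits.
    score = await remote_inference(features)
    # "produce"-like step: resume once the result is back and finish the event.
    return event_id, score


async def run(events):
    # Schedule all events concurrently so their remote calls overlap in time,
    # analogous to a task scheduler keeping CPU threads busy during remote calls.
    return await asyncio.gather(*(process_event(i, f) for i, f in enumerate(events)))


if __name__ == "__main__":
    events = [[1.0, 2.0, 3.0]] * 10
    start = time.perf_counter()
    results = asyncio.run(run(events))
    elapsed = time.perf_counter() - start
    print(results[0], f"{elapsed:.2f} s")  # elapsed is close to one call's latency, not ten
```

Because the ten simulated requests are in flight at the same time, the run takes roughly one request's latency rather than ten; in SONIC the analogous gain comes from the scheduler giving the freed CPU thread other modules or events to process.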

Team

- Daniel Craik

- Mark Neubauer

- Philip Harris

- Dylan Rankin

Presentations

- 1 Mar 2021 - "Quick and Quirk with Quarks", Philip Harris, IAIFI colloquium

- 1 Mar 2021 - "Scientific Applications of FPGAs at the LHC", Philip Harris, FPGA'21

- 9 Sep 2020 - "FPGAs-as-a-Service Toolkit (FaaST)", Dylan Rankin, H2RC'20 (SC20)

- 17 Nov 2019 - "ML Acceleration with Heterogeneous computing for big data Physics", Philip Harris, H2RC'19 (SC19)

- 19 Oct 2019 - "FPGA ML inference as a service on AWS", Markus Atkinson, FastML Co-processors Meeting

- 13 Feb 2019 - "Using ML on FPGAs to enhance reconstruction output", Dylan Rankin, IRIS-HEP Topical Meeting

Publications

- Progress towards an improved particle flow algorithm at CMS with machine learning, F. Mokhtar, J. Pata, J. Duarte, E. Wulff, M. Pierini and J. Vlimant, arXiv 2303.17657 (30 Mar 2023) [13 citations].

- Explaining machine-learned particle-flow reconstruction, F. Mokhtar, R. Kansal, D. Diaz, J. Duarte, J. Pata, M. Pierini and J. Vlimant, arXiv 2111.12840 (24 Nov 2021) [12 citations].

- Applications and Techniques for Fast Machine Learning in Science, A. Deiana et al., Front.Big Data 5 787421 (2022) (25 Oct 2021) [42 citations] [NSF PAR].

- FPGAs-as-a-Service Toolkit (FaaST), D. Rankin et al., arXiv 2010.08556 (16 Oct 2020) [20 citations].

- GPU-Accelerated Machine Learning Inference as a Service for Computing in Neutrino Experiments, M. Wang, T. Yang, M. Acosta Flechas, P. Harris, B. Hawks, B. Holzman, K. Knoepfel, J. Krupa, K. Pedro and N. Tran, Front.Big Data 3 604083 (2021) (09 Sep 2020) [26 citations].

- GPU coprocessors as a service for deep learning inference in high energy physics, J. Krupa et al., Mach.Learn.Sci.Tech. 2 035005 (2021) (20 Jul 2020) [31 citations] [NSF PAR].

- FPGA-accelerated machine learning inference as a service for particle physics computing, J. Duarte et al., Comput.Softw.Big Sci. 3 13 (2019) (18 Apr 2019) [36 citations] [NSF PAR].