OSG Operations

The OSG Operations team is responsible for deploying, configuring, and running the OSG-owned services that contribute to the overall OSG fabric of services.

OSG-LHC makes a modest contribution to this overall operations team, focused on the LHC needs. We note that all the OSG Operations services, including the contributions from OSG-LHC, are used by the wider community of all of open science that benefits from OSG. The operations project of OSG-LHC is thus a direct benefit to the US LHC Operations programs and the wider open science community.

Activities

- GRACC accounting system maintenance: The team operates the GRACC accounting system that tracks usage for all projects that use OSG software or services.

- CVMFS infrastructure maintenance: The heterogeneity of OSG poses considerable challenges for the applications running in this environment. To address these challenges, CERN developed a product, CVMFS, for the LHC community that allows curation of a uniform runtime environment across all compute resources globally. OSG has adopted this approach to support all of open science. OSG-LHC operates part of the infrastructure necessary to achieve this.

- Open Science Data Federation (OSDF) maintenance: The team operates the OSDF infrastructure for staging and caching data for access across the OSG.

- Operation of other services: The team also operates various services such as the OSG software repositories, the Open Science Pool, the GlideinWMS metascheduling system, compute Access Points to support individual scientists across all domains of open science, and user- and system-administrator-facing web pages.

Accomplishments

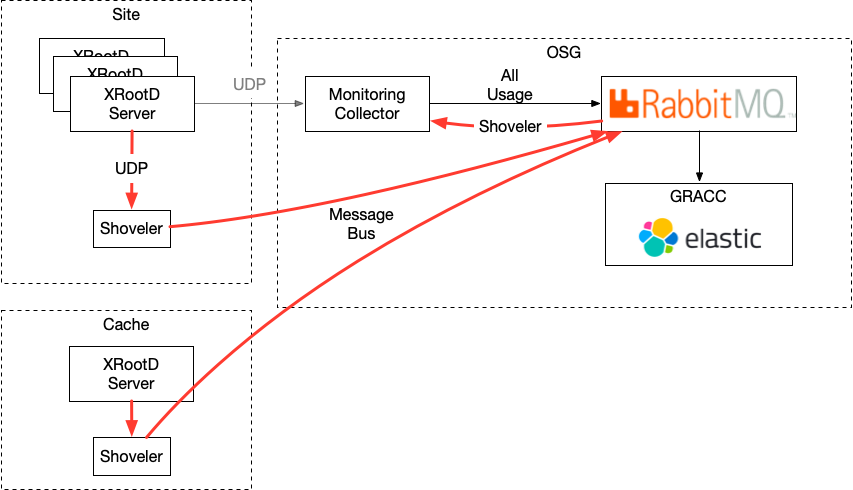

- XRootD Monitoring Shoveler: OSG-LHC operations provides infrastructure that lets researchers transfer data to their workflows on compute resources. XRootD is the underlying technology that facilitates this. To track data access in the accounting system, accounting information must be collected from all of the XRootD servers. By performing scale tests, the team found instability in the UDP-based data access metric collection native to XRootD. Details of these tests can be found in the reports section below. To aid in the collection of XRootD monitoring packets, the OSG operations team and DOMA developed the XRootD Monitoring Shoveler. The architecture of the shoveler is shown in Figure 1. The shoveler makes the metric passing more resilient to packet loss by leveraging the OSG message bus: it repackages the raw UDP packets on the server and then sends them through more stable TCP stream connections to the bus. Ultimately, the shoveler is responsible for ensuring that data access accounting data is collected reliably.

- Service Level Agreements and Service Monitoring: The Operations team has created Service Level Agreements for all OSG-operated services. In the SLAs, the team has defined what “availability” means for each service type, along with an associated target percentage. In addition, the team has implemented an internal monitoring system to measure, report on, and ensure that the declared availability is met for each service.

- Adoption of Container Orchestration: OSG has containerized and migrated most of its services into a Kubernetes-based deployment model. This work has improved the quality of service by standardizing and unifying the configuration and installation of services. Further benefits include decoupling specific service instances from their respective data centers. Multiple services are now running out of two data centers, one at the University of Wisconsin-Madison and the other at the University of Chicago. The separate locations provide redundancy to improve fault tolerance and increase service uptime.
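The repackaging step described for the XRootD Monitoring Shoveler above can be illustrated with a minimal Python sketch. This is not the shoveler's actual implementation: the envelope field names (`remote`, `data`), the function names, and the in-memory queue standing in for the TCP connection to the OSG message bus are all assumptions made for illustration.

```python
import base64
import json
import queue
import socket


def package_datagram(payload: bytes, remote: tuple) -> str:
    """Wrap one raw UDP monitoring packet in a JSON envelope (hypothetical format)."""
    return json.dumps({
        "remote": f"{remote[0]}:{remote[1]}",                # sender address
        "data": base64.b64encode(payload).decode("ascii"),   # raw bytes, text-safe
    })


def shovel_once(udp_sock: socket.socket, bus: queue.Queue) -> None:
    """Read one datagram and hand its envelope to the reliable transport."""
    payload, remote = udp_sock.recvfrom(65535)
    bus.put(package_datagram(payload, remote))


def demo() -> dict:
    """Send one packet over loopback UDP and return the envelope that was queued."""
    bus = queue.Queue()  # stand-in for the TCP stream to the message bus
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))   # let the OS pick a free port
    receiver.settimeout(2.0)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sender.sendto(b"\x01\x02xrootd-monitoring", receiver.getsockname())
        shovel_once(receiver, bus)
    finally:
        sender.close()
        receiver.close()
    return json.loads(bus.get_nowait())
```

The point of the envelope is that the raw packet only has to survive a short UDP hop on the server itself. Everything after that travels over a connection-oriented transport.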

GRACC

GRACC is the OSG accounting system that tracks usage for all projects that use OSG software or services. Within OSG-LHC in particular, and IRIS-HEP more generally, it provides the following functionality:

- Accounting of US LHC Commitments to WLCG: The US LHC Operations programs make annual pledges of compute and storage resources to the WLCG. WLCG tracks the resources actually provided and releases them in a monthly report to the collaborations and funding agencies worldwide. OSG-LHC is responsible for delivering accurate accounting records for the US LHC Operations program resources, including potential HPC allocations, and other resources to WLCG. This includes the entire chain from the sites to WLCG. OSG-LHC provides the software, documentation, and training for site administrators so that they can deploy the appropriate software to collect accounting records at their site. OSG-LHC works with US LHC Operations program management and WLCG to resolve any potential problems in the accounting. This is an essential service for the US LHC Operations programs to fulfill their MoU commitments to WLCG.

- Accounting/Monitoring Infrastructure for XCache deployments: OSG-LHC collaborates with the XRootD software team and DOMA in IRIS-HEP on providing data access monitoring and accounting information from the XRootD infrastructure deployed by the US LHC Operations programs to the CERN Monit infrastructure, a data analytics infrastructure located at CERN. The records traverse the OSG Operations supported infrastructure to its RabbitMQ bus, and from there to Monit. While we initially focus on data access records to XCache, we see this as the desired architecture for all XRootD services, and OSG operations works with DOMA, the XRootD team, and the US LHC Operations programs on transitioning towards this vision. See the reports listed below for more details on this activity.

- Accounting/Monitoring Infrastructure for Network Performance: The OSG Network Monitoring project depends on this accounting infrastructure for the collection and maintenance of network performance records. The architecture of the accounting system includes a commercially operated RabbitMQ message bus as the central point through which all accounting records transit. This bus has multiple information consumers, including services at CERN that retrieve network performance records for all of WLCG. OSG-LHC thus provides network performance data to CERN as a service.

Architecture of GRACC

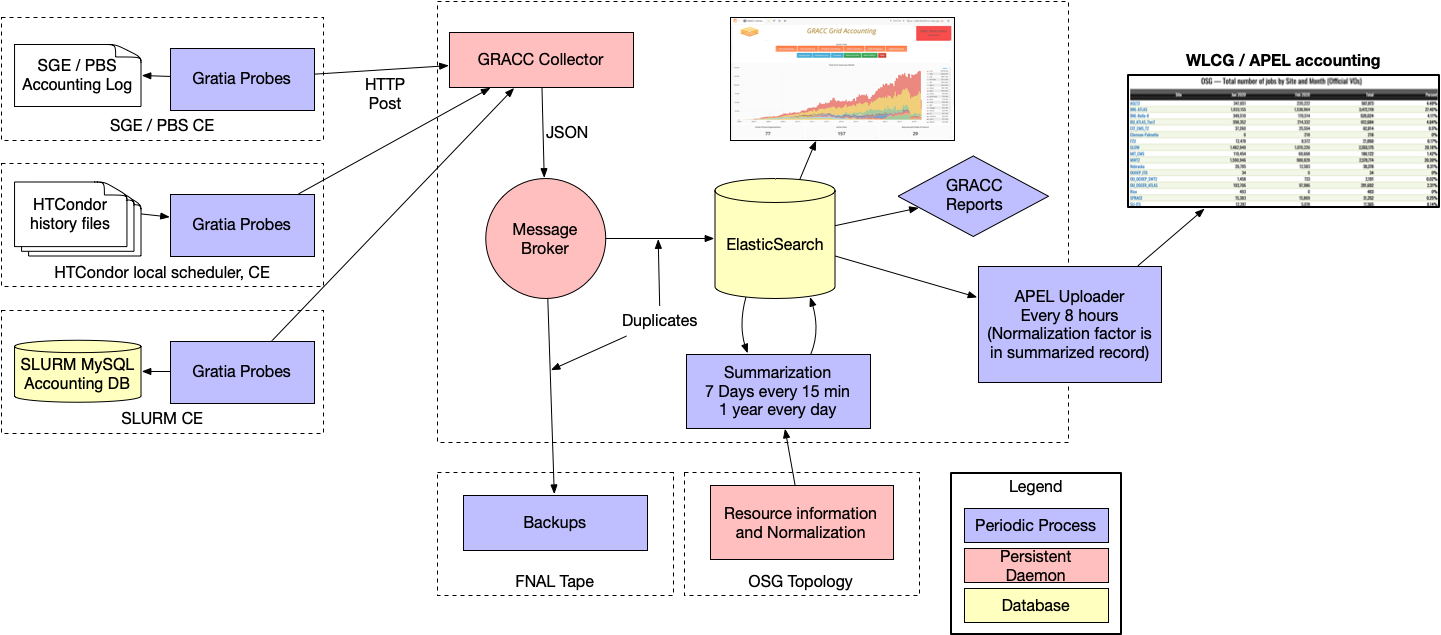

The GRACC ecosystem consists of five main components: probes, data collection, message broker, data sinks, and visualization. It was designed for dHTC in that it supports high record rates from a large number of different types of probes. As of Spring 2021, the GRACC message bus receives records at a rate in excess of 100 Hz from close to 300 probes. A diverse set of consumers reads records from the bus at more than 200 Hz.

The probes are from the original Gratia ecosystem. Each supported batch system (HTCondor, PBS, SLURM, LSF) has a corresponding probe. The probe knows how to query the batch system for data and convert it to a common format. For example, with HTCondor, the probe parses the history files of completed jobs. For the SLURM batch system, the probe queries the SLURM database for completed jobs. The resulting usage records are uploaded to the GRACC collector via an HTTP POST. All data is buffered locally until successfully sent to the GRACC data collection service. The collector service listens for incoming records, parses, and transforms the records into an internal format. It sends the transformed record to a message broker.
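The probe behavior described above (convert a batch-system job record to a common format, buffer it locally, and keep it until the collector accepts it) can be sketched as follows. This is an illustrative sketch, not the Gratia probe code: the field names, the helper functions, and the `send` callable that stands in for the HTTP POST to the collector are all hypothetical.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable


def to_usage_record(job: dict) -> dict:
    """Map a batch-system job record to a common format (field names are hypothetical)."""
    return {
        "job_id": str(job["id"]),
        "user": job["owner"],
        "wall_seconds": job["end"] - job["start"],
        "cores": job.get("cores", 1),
    }


def buffer_record(record: dict, outbox: Path) -> Path:
    """Persist the record on local disk before any upload attempt."""
    outbox.mkdir(parents=True, exist_ok=True)
    path = outbox / f"{record['job_id']}.json"
    path.write_text(json.dumps(record))
    return path


def flush(outbox: Path, send: Callable[[dict], bool]) -> int:
    """Try to upload every buffered record; delete a file only after success."""
    sent = 0
    for path in sorted(outbox.glob("*.json")):
        if send(json.loads(path.read_text())):
            path.unlink()
            sent += 1
    return sent


def demo() -> tuple:
    """Show that a failed upload keeps the record and a later success clears it."""
    with tempfile.TemporaryDirectory() as tmp:
        outbox = Path(tmp) / "outbox"
        job = {"id": 42, "owner": "alice", "start": 100, "end": 160}
        buffer_record(to_usage_record(job), outbox)
        failed = flush(outbox, lambda r: False)     # collector unreachable
        kept = len(list(outbox.glob("*.json")))     # record is still buffered
        succeeded = flush(outbox, lambda r: True)   # collector reachable again
        left = len(list(outbox.glob("*.json")))
    return failed, kept, succeeded, left
```

Deleting the local file only after the upload reports success is what makes a collector outage harmless in this sketch: the next `flush` simply retries whatever is still in the outbox.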

The message broker distributes the records to multiple destinations. The message broker is based on the RabbitMQ software. It handles the durability of messaging between components in the system. It also buffers messages in case of a transient outage.
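The broker's role can be illustrated with a small in-memory model of fanout delivery with per-consumer buffering. The sketch does not use RabbitMQ or any client library. The `FanoutBroker` class and its methods are hypothetical and only mimic the behavior described above.

```python
from collections import deque


class FanoutBroker:
    """Toy model: every published record is queued for every bound consumer."""

    def __init__(self) -> None:
        self._queues = {}  # consumer name -> deque of pending records

    def bind(self, consumer: str) -> None:
        """Create a queue for the consumer if it does not exist yet."""
        self._queues.setdefault(consumer, deque())

    def publish(self, record: dict) -> None:
        """Copy the record into every consumer's queue."""
        for pending in self._queues.values():
            pending.append(record)

    def drain(self, consumer: str) -> list:
        """Return and remove everything waiting for this consumer."""
        pending = self._queues[consumer]
        delivered = list(pending)
        pending.clear()
        return delivered
```

In this model a consumer that is temporarily offline loses nothing: its queue keeps growing until it calls `drain` again, while other consumers continue to receive records independently.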

One GRACC data sink is the set of Logstash instances that store the data in an Elasticsearch database. The database stores the records for querying and visualization. Another data sink is the backup infrastructure. Each usage record is automatically backed up to tape in case of a failure of the database. The usage records can be replayed and the database restored from the tape backups.
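Replaying backed-up records into a database can be sketched as follows, assuming the backup is a stream of JSON lines and that each record carries a unique identifier. Both the backup format and the `record_id` field are assumptions for illustration, and a plain dictionary stands in for the database.

```python
import json
from typing import Iterable


def replay(backup_lines: Iterable[str], database: dict) -> int:
    """Re-insert backed-up records keyed by ID; return how many were missing."""
    restored = 0
    for line in backup_lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines in the backup stream
        record = json.loads(line)
        if record["record_id"] not in database:
            restored += 1
        database[record["record_id"]] = record  # overwrite is harmless
    return restored
```

Keying on the record identifier makes the replay idempotent in this sketch: running it twice over the same backup leaves the database unchanged the second time.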

Visualization of GRACC usage records is done using either Grafana or Kibana visualization tools. The GRACC team maintains numerous preformatted dashboards displaying usage of the OSG.

References:

- Weitzel, D., Bockelman, B., Zvada, M., Retzke, K., & Bhat, S. (2019). GRACC: GRid ACcounting Collector. In EPJ Web of Conferences (Vol. 214, p. 03032). EDP Sciences. https://doi.org/10.1051/epjconf/201921403032

- Retzke, K., Weitzel, D., Bhat, S., Levshina, T., Bockelman, B., Jayatilaka, B., … & Wuerthwein, F. (2017, October). GRACC: New generation of the OSG accounting. In Journal of Physics: Conference Series (Vol. 898, No. 9, p. 092044). IOP Publishing. https://doi.org/10.1088/1742-6596/898/9/092044

- Levshina, T., Sehgal, C., Bockelman, B., Weitzel, D., & Guru, A. (2014, June). Grid accounting service: state and future development. In Journal of Physics: Conference Series (Vol. 513, No. 3, p. 032056). IOP Publishing. https://doi.org/10.1088/1742-6596/513/3/032056

Reports

The OSG Operations team has produced two reports in collaboration with the DOMA group.

Team

- Fabio Andrijauskas

- Diego Davila

- Jeff Dost

- Derek Weitzel

- Huijun Zhu

Presentations

- 16 Mar 2022 - "Updates on the OSDF Monitoring System", Derek Weitzel, OSG All-Hands Meeting 2022

- 27 Jan 2022 - "XRootD Monitoring Flow", Derek Weitzel, WLCG Operations Coordination

- 14 Dec 2021 - "XRootD Shoveler", Derek Weitzel, Tier-2 Facilities Meeting

- 3 Mar 2021 - "Moving science data: One CDN to rule them all", Derek Weitzel, OSG All Hands Meeting 2021

- 31 Jul 2020 - "High Throughput Science Workshop", Igor Sfiligoi, PEARC20

- 29 Jul 2020 - "Demonstrating a Pre-Exascale, Cost-Effective Multi-Cloud Environment for Scientific Computing", Igor Sfiligoi, PEARC20

- 28 Jun 2020 - "Running a Pre-exascale, Geographically Distributed, Multi-cloud Scientific Simulation", Igor Sfiligoi, ISC20

- 23 Apr 2020 - "OSG's use of XCache", Derek Weitzel, XCache Meeting

- 22 Apr 2020 - "XRootD Validation Plan", Derek Weitzel, USCMS S&C Blueprint Meeting

- 27 Feb 2020 - "OSG Accounting and Visualization", Derek Weitzel, IRIS-HEP Poster Session

- 28 Jan 2020 - "Hybrid, multi-Cloud HPC for Multi-Messenger Astrophysics with IceCube", Igor Sfiligoi, Google Cloud HPC Day San Diego

- 27 Jan 2020 - "Running a 380PFLOP32s GPU burst for Multi-Messenger Astrophysics with IceCube across all available GPUs in the Cloud", Igor Sfiligoi, NRP Engagement webinar

- 21 Nov 2019 - "Serving HTC Users in Kubernetes by Leveraging HTCondor", Igor Sfiligoi, KubeCon North America 2019

- 5 Nov 2019 - "A Lightweight Door into Non-grid Sites", Igor Sfiligoi, CHEP 2019

- 30 Sep 2019 - "OSG GRid ACCounting system::GRACC", Derek Weitzel, Elasticsearch Workshop @FNAL

- 17 Sep 2019 - "Nautilus & IceCUBE/LIGO", Igor Sfiligoi, Global Research Platform Workshop 2019

- 13 Sep 2019 - "The Open Science Grid", Igor Sfiligoi, PRAGMA 37

- 30 Jul 2019 - "StashCache: A Distributed Caching Federation for the Open Science Grid", Derek Weitzel, Practice and Experience in Advanced Research Computing

- 11 Jun 2019 - "OSG Data Federation", Derek Weitzel, XRootD Workshop at CC-IN2P3