Third Party Copy

LHC data is constantly being moved between computing and storage sites to support analysis, processing, and simulation, at a scale that is currently unique within the science community. For example, the CMS experiment at the LHC manages approximately 200PB of data and moves about 1PB between sites every day. Across all four experiments, global data movement regularly peaked above 250Gbps in 2021, and this was without the LHC accelerator taking new data!
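To put the daily volume in the same units as the network rates quoted here, the short sketch below converts petabytes per day into an average rate in Gbps and back. It assumes decimal units (1PB = 10^15 bytes) and a perfectly even spread over 24 hours, which real transfers will not follow; the function names are illustrative only.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def pb_per_day_to_gbps(petabytes_per_day: float) -> float:
    """Average rate in Gbps for a daily volume in PB (decimal units assumed)."""
    bits_per_day = petabytes_per_day * 1e15 * 8
    return bits_per_day / SECONDS_PER_DAY / 1e9


def gbps_to_pb_per_day(gbps: float) -> float:
    """Daily volume in PB moved by a rate in Gbps sustained for 24 hours."""
    bytes_per_day = gbps * 1e9 / 8 * SECONDS_PER_DAY
    return bytes_per_day / 1e15


if __name__ == "__main__":
    # 1PB per day, the CMS figure quoted above, as an average rate.
    print(f"1 PB/day    = {pb_per_day_to_gbps(1.0):.1f} Gbps on average")
    # A sustained 1Tbps (1000Gbps) expressed as a daily volume.
    print(f"1000 Gbps   = {gbps_to_pb_per_day(1000.0):.1f} PB/day")
```

Under these assumptions, 1PB per day corresponds to an average of roughly 93Gbps, and a sustained 1Tbps corresponds to roughly 10.8PB per day.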

The HL-LHC promises a data deluge: we will need to modernize the infrastructure to sustain at least 1Tbps by 2027, likely peaking at twice that level. Historically, bulk data movement has been done with the GridFTP protocol; as the community looks to the increased data volumes of HL-LHC and GridFTP becomes increasingly niche, there is a need to use modern software, protocols, and techniques to move data. The IRIS-HEP DOMA area - in collaboration with the WLCG DOMA activity - is helping the LHC (and HEP in general) transition to using HTTP for bulk data transfer. This bulk data transfer between sites is often referred to as "third party copy" or simply TPC.
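As a rough illustration of what a third party copy request can look like over HTTP, the sketch below builds (but does not send) a "pull mode" request: the client asks the destination storage to fetch a file directly from the source storage. It assumes the WebDAV `COPY` verb with a `Source` header, and a `TransferHeader` prefix for headers that the destination should forward to the source. This page does not spell out those details, so treat them as assumptions; the hostnames, paths, tokens, and the `CopyRequest` and `build_pull_request` names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CopyRequest:
    """A plain description of an HTTP request; nothing here touches the network."""
    method: str
    url: str
    headers: dict = field(default_factory=dict)


def build_pull_request(destination_url: str, source_url: str,
                       destination_token: str, source_token: str) -> CopyRequest:
    """Describe a pull-mode TPC request sent by the client to the destination.

    Assumed header semantics (not stated on this page):
      - Source: where the destination should fetch the data from.
      - Authorization: authorizes the client at the destination.
      - TransferHeaderAuthorization: forwarded by the destination to the
        source as a plain Authorization header.
    """
    headers = {
        "Source": source_url,
        "Authorization": f"Bearer {destination_token}",
        "TransferHeaderAuthorization": f"Bearer {source_token}",
    }
    return CopyRequest(method="COPY", url=destination_url, headers=headers)


if __name__ == "__main__":
    request = build_pull_request(
        destination_url="https://storage-b.example.org/store/file.root",  # hypothetical
        source_url="https://storage-a.example.org/store/file.root",       # hypothetical
        destination_token="DEST_TOKEN_PLACEHOLDER",
        source_token="SOURCE_TOKEN_PLACEHOLDER",
    )
    print(request.method, request.url)
    for name, value in request.headers.items():
        print(f"{name}: {value}")
```

The point of the sketch is that the data never passes through the client: the client only sends a small control request, and the two storage endpoints move the bytes between themselves.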

The first phase of bulk data transfer modernization is the switch from GridFTP to HTTP for TPC. The last milestone for the protocol switch project was achieved with the successful completion of the WLCG Data Challenge in October 2021. Subsequently, both CMS and ATLAS have declared that supporting GridFTP is optional (some sites, like UCSD, have already decommissioned their endpoints) and IRIS-HEP is focusing on token-based authorization of transfers for Year 5 of the project.

Data Challenge 2021

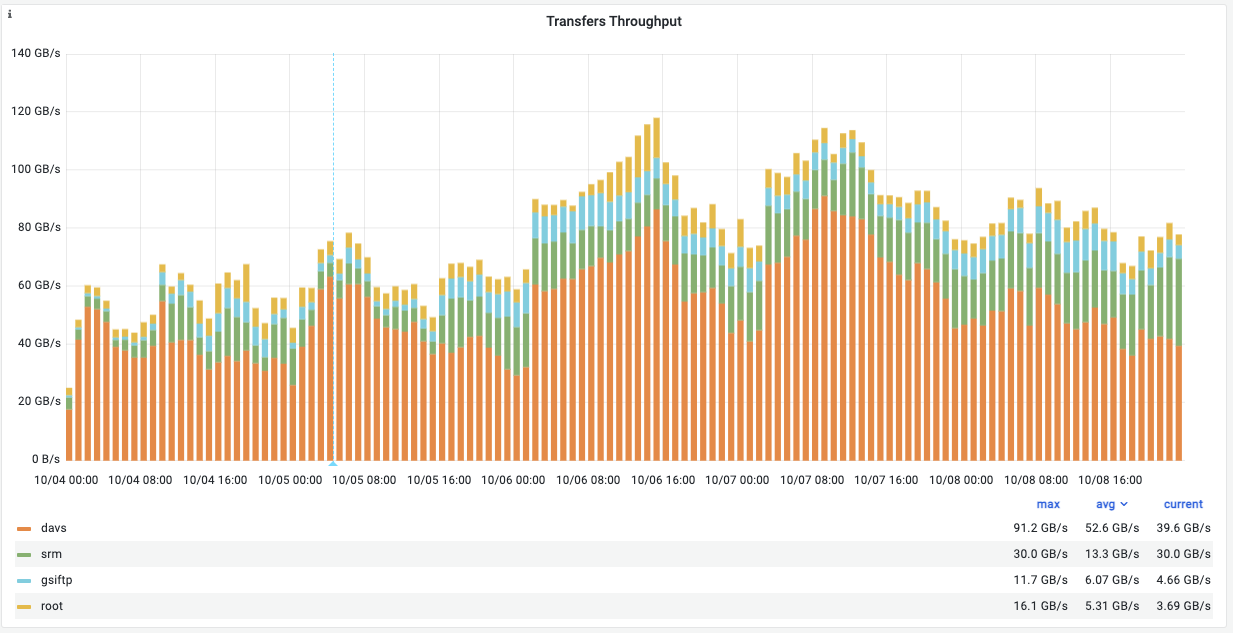

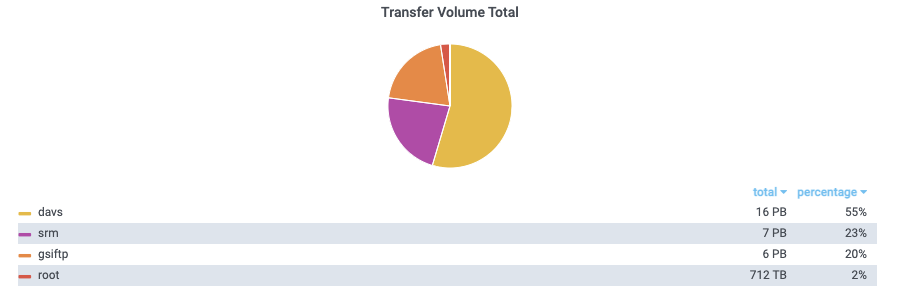

Throughput achieved per protocol during the 2021 Data Challenge. The "davs" series represents the use of HTTP-TPC and the WebDAV protocol.

The sections below describe the milestones established for this project and how and when they were achieved.

How fast is HTTP?

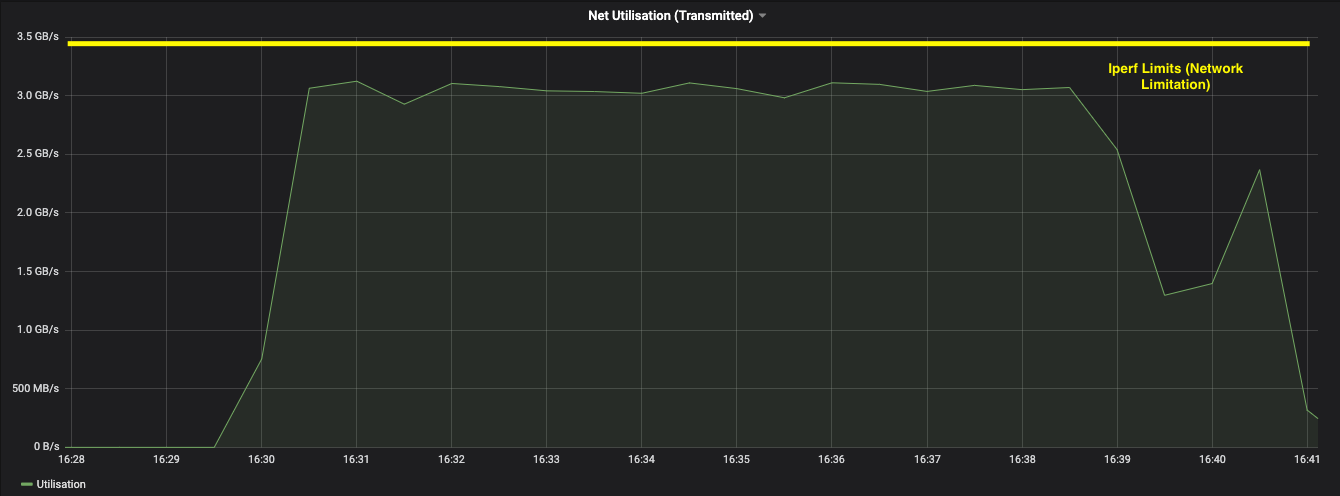

The above graph shows data movement rates (up to 24Gbps) for a single host, achieved during standalone tests; a typical LHC site will load-balance across multiple hosts in order to saturate available network links. With a sufficiently performant HTTP server, we have observed the protocol can go as quickly as the underlying network infrastructure.
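The load-balancing remark can be made concrete with a back-of-the-envelope estimate of how many hosts a site would need to fill a given link. The sketch below assumes every host sustains the 24Gbps peak quoted above and that throughput adds up linearly across hosts; both are simplifications, and the link sizes are example inputs rather than measurements.

```python
import math


def hosts_needed(link_gbps: float, per_host_gbps: float) -> int:
    """Smallest number of hosts whose combined rate reaches the link capacity.

    Assumes identical hosts and perfectly linear scaling.
    """
    if per_host_gbps <= 0:
        raise ValueError("per_host_gbps must be positive")
    return math.ceil(link_gbps / per_host_gbps)


if __name__ == "__main__":
    per_host = 24.0  # Gbps, the single-host rate from the standalone tests
    for link in (100.0, 400.0, 1000.0):  # example link capacities in Gbps
        print(f"{link:6.0f} Gbps link -> {hosts_needed(link, per_host)} hosts")
```

In practice a site would likely provision more hosts than this lower bound, since the 24Gbps figure is a peak from standalone tests rather than a sustained production rate.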

During the development phase of IRIS-HEP, the team worked with a variety of implementations to improve code and ensure interoperability. The first goal was to get all commonly-used storage implementations for the LHC to provide an HTTP endpoint. Initially, the goal was set for one site to receive more than 30% of its data using the HTTP protocol. This was accomplished in 2020; for 2021, the goal is to have every LHC site use HTTP-TPC.

For CMS, we picked two sites, Nebraska and UCSD, to lead the transition by using the HTTP-TPC protocol for all their incoming production transfers from the many sites that can support the protocol.

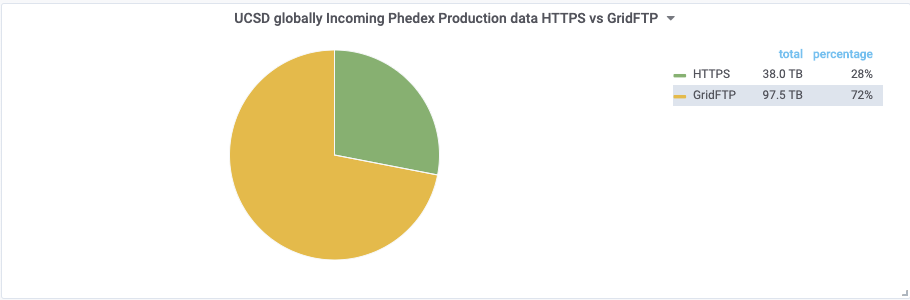

Percentage of data transferred to UCSD using GridFTP and HTTP

The above shows the amount of data transferred to UCSD using the GridFTP protocol with respect to HTTP during July 2020.

The next goal was for a single site to have 50% of all its data transferred via HTTPS.

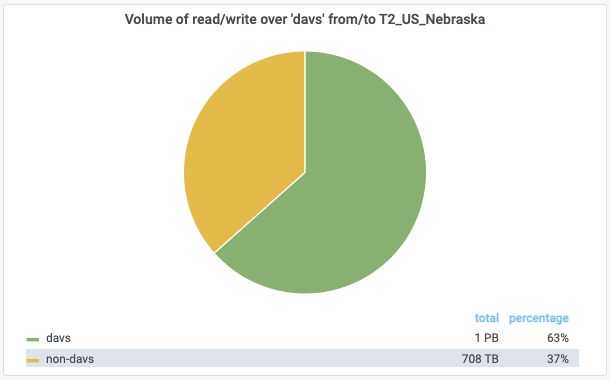

Percentage of data transferred to/from Nebraska via HTTPS vs non-HTTPS

The above shows the amount of production data transferred to and from Nebraska using HTTPS with respect to non-HTTPS during April 2021.

On the ATLAS side, the transition occurred at an even faster pace; most of their sites provided an HTTPS endpoint as of April 2021.

Protocol breakdown for transfers at all ATLAS sites

The percentage of data transferred among all ATLAS sites (excluding tape endpoints) using each of the available protocols during April 2021.

One of the 2022 milestones for this project was to demonstrate the ability to sustain aggregate 100Gbps data flows from a source storage using HTTP-TPC.

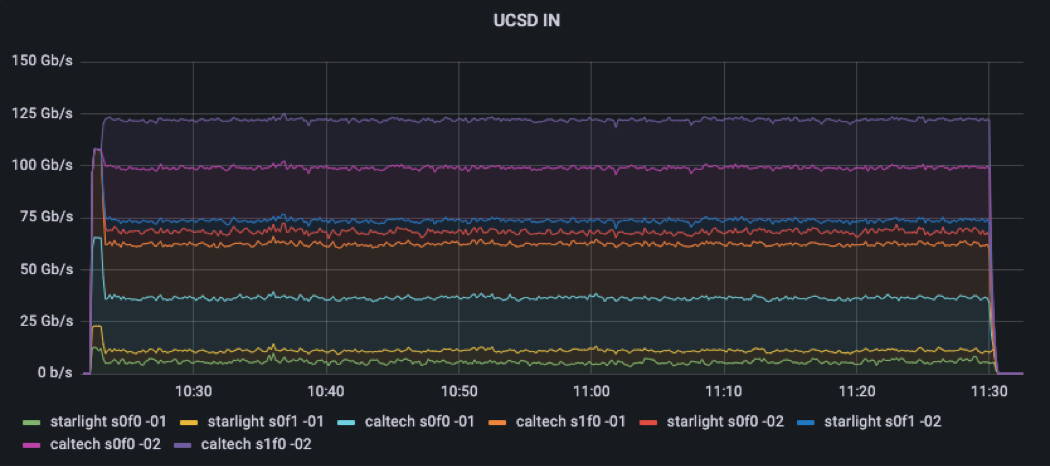

During SC21, the DOMA team demonstrated the ability to use three XRootD clusters in three different locations: UCSD, Caltech, and the Starlight point of presence in Chicago. Caltech and Starlight served as sources and UCSD as the sink. Caltech was connected to UCSD via a dedicated 100Gbps link, and Starlight had two 100Gbps links available to connect to UCSD. Using the PRP’s Nautilus cluster, we were able to easily deploy the software and configurations necessary for these experiments.

In our test we were able to reach a disk-to-disk ‘real’ transfer rate of 125Gbps out of a theoretical network limit of 300Gbps.

Data transfer rate achieved during SC21 Tests

This plot shows the breakdown, per node and interface, of the transfer rate achieved from the nodes at Caltech and Starlight during the SC21 tests.

The observed limitation for the SC21 demo was the CPU power available on the Starlight cluster: at Starlight we were only able to use 12.5% of the available bandwidth, while Caltech’s cluster reached 100% of its capacity.
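The SC21 figures can be cross-checked with a few lines of arithmetic. The sketch below assumes that the 12.5% figure refers to Starlight's two 100Gbps links combined (200Gbps) and that Caltech's capacity is its single 100Gbps link; that reading is an interpretation of the text, although it does reproduce the reported 125Gbps total.

```python
# Link capacity in Gbps and the fraction of it that was used, per source site.
# The 200Gbps Starlight capacity (two 100Gbps links) is an assumed reading.
sources = {
    "Caltech": {"capacity_gbps": 100.0, "fraction_used": 1.0},
    "Starlight": {"capacity_gbps": 200.0, "fraction_used": 0.125},
}

achieved_gbps = {
    site: info["capacity_gbps"] * info["fraction_used"]
    for site, info in sources.items()
}
total_achieved_gbps = sum(achieved_gbps.values())
total_capacity_gbps = sum(info["capacity_gbps"] for info in sources.values())
overall_utilization = total_achieved_gbps / total_capacity_gbps

if __name__ == "__main__":
    for site, rate in achieved_gbps.items():
        print(f"{site}: {rate:.0f} Gbps")
    print(f"Total: {total_achieved_gbps:.0f} of {total_capacity_gbps:.0f} Gbps "
          f"({overall_utilization:.1%})")
```

Under this reading, Caltech contributes 100Gbps and Starlight 25Gbps, so the demo used a little under 42% of the theoretical 300Gbps.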

More Information

Team

- Brian Bockelman

- Diego Davila

- Jonathan Guiang

- Aashay Arora

Presentations

- 30 Oct 2024 - "The current status of the Rucio/SENSE integration project", Aashay Arora, CMS O&C Week

- 23 Oct 2024 - "Benchmarking XRootD-HTTPS on 400Gbps Links with Variable Latencies", Aashay Arora, CHEP'24

- 22 Oct 2024 - "Data Movement Manager (DMM) for the SENSE-Rucio Interoperation Prototype", Aashay Arora, CHEP'24

- 15 Oct 2024 - "Rucio-SENSE Interoperation Prototype", Aashay Arora, CMS Rucio Meeting

- 2 Oct 2024 - "Integration between Rucio and SENSE", Diego Davila, 7th Rucio Community Workshop

- 13 Sep 2024 - "Network isolation for multi-IP exposure in XRootD", Diego Davila, XRootD and FTS Workshop

- 5 Sep 2024 - "Rucio/SENSE in SSL", Diego Davila, IRIS-HEP Institute Retreat

- 11 Apr 2024 - "Data Movement Manager for the Rucio-SENSE Interoperation Prototype", Aashay Arora, Rucio Meeting

- 27 Mar 2024 - "Rucio/SENSE during DC24", Diego Davila, S&C Blueprint Meeting

- 14 Nov 2023 - "400Gbps benchmark of XRootD HTTP-TPC", Aashay Arora, SC'23

- 9 Nov 2023 - "Rucio/SENSE in DC24", Diego Davila, Data Challenge 2024 Workshop

- 11 Oct 2023 - "Rucio/SENSE overview and our plans for DC24", Diego Davila, USCMS S&C Blueprint Meeting - DC24 preparation

- 12 Sep 2023 - "Rucio/SENSE overview and our plans for DC24", Diego Davila, IRIS-HEP Institute Retreat

- 12 Jul 2023 - "Rucio/SENSE implementation for CMS", Diego Davila, Throughput Computing 2023

- 11 May 2023 - "400Gbps benchmark of XRootD HTTP-TPC", Aashay Arora, CHEP'23

- 23 Mar 2023 - "Scaling transfers for HL-LHC, DC24 plan", Diego Davila, WLCG DOMA General Meeting

- 11 Nov 2022 - "Automated Network Services for Exascale Data Movement", Diego Davila, 5th Rucio Community Workshop

- 7 Nov 2022 - "Network Management Enhancements for the High Luminosity Era", Diego Davila, WLCG Workshop 2022

- 31 Aug 2022 - "Managed Network Services for Exascale Data Movement Across Large Global Scientific Collaborations", Diego Davila, WLCG DOMA General Meeting

- 22 Oct 2021 - "Migration to WebDAV", Diego Davila, Fall21 Offline Software and Computing Week

- 20 Jul 2021 - "Using Microsoft Azure for XRootD network benchmarking", Aashay Arora, PEARC'21 Poster Session

- 23 Jun 2021 - "OSG Xrootd Monitoring", Diego Davila, WLCG - xrootd monitoring discussion

- 18 May 2021 - "Systematic benchmarking of HTTPS third party copy on 100Gbps links using XRootD", Aashay Arora, vCHEP'21

- 12 May 2021 - "Transferring at 500Gbps with XRootD", Diego Davila, S&C Blueprint Meeting - Data Challenge

- 5 Mar 2021 - "Latest updates on the WLCG Token Transition Planning", Brian Bockelman, OSG All-Hands Meeting 2021

- 3 Mar 2021 - "HTTP Third-Party Copy: Getting rid of GridFTP", Diego Davila, OSG All-Hands Meeting 2021

- 24 Feb 2021 - "Update on the adoption of WebDAV for Third Party Copy transfers", Diego Davila, Offline and Computing Weekly meeting

- 10 Feb 2021 - "Follow-up on the WLCG Token Transition Timeline", Brian Bockelman, February 2021 GDB

- 9 Dec 2020 - "Update on OSG Token & Transfer Transition", Brian Bockelman, December 2020 GDB

- 21 Oct 2020 - "Benchmarking TPC Transfers on 100G links", Edgar Fajardo, DOMA / TPC Meeting

- 5 Aug 2020 - "Progress on transferring with HTTP-TPC", Diego Davila, Offline and Computing Weekly meeting

- 29 Apr 2020 - "OSG-LHC Technical Roadmap", Brian Bockelman, US ATLAS Computing Facility

- 19 Mar 2020 - "Update on the Globus transition", Brian Bockelman, OSG Council March 2020 Meeting

- 9 Mar 2020 - "IRIS-HEP and DOMA related activities", Brian Bockelman, WLCG DOMA F2F @ FNAL

- 27 Feb 2020 - "Modernizing the LHC’s transfer infrastructure", Edgar Fajardo, IRIS-HEP Poster Session

- 27 Feb 2020 - "Modernizing the LHC’s transfer infrastructure", Brian Bockelman, IRIS-HEP Poster Session

- 27 Nov 2019 - "Benchmarking xrootd HTTP tests", Edgar Fajardo, WLCG DOMA General Meeting

- 5 Nov 2019 - "Third-party transfers in WLCG using HTTP", Brian Bockelman, 24th International Conference on Computing in High Energy & Nuclear Physics

- 12 Jun 2019 - "XRootD and HTTP performance studies", Edgar Fajardo, XrootD Workshop@CC-IN2P3

- 28 May 2019 - "WLCG DOMA TPC Working Group", Brian Bockelman, US CMS Tier-2 Facilities May 2019 Meeting

- 6 Feb 2019 - "IRIS-HEP DOMA", Brian Bockelman, IRIS-HEP Steering Board Meeting

Publications

- Managed Network Services for Exascale Data Movement Across Large Global Scientific Collaborations, F. Wurthwein, J. Guiang, A. Arora, D. Davila, J. Graham, D. Mishin, T. Hutton, I. Sfiligoi, H. Newman, J. Balcas, T. Lehman, X. Yang, and C. Guok, in 2022 4th Annual Workshop on Extreme-scale Experiment-in-the-Loop Computing (XLOOP), (Los Alamitos, CA, USA), pp. 16–19, IEEE Computer Society, November, 2022. (14 Nov 2022).

- Integrating End-to-End Exascale SDN into the LHC Data Distribution Cyberinfrastructure, Jonathan Guiang, Aashay Arora, Diego Davila, John Graham, Dima Mishin, Igor Sfiligoi, Frank Wuerthwein, Tom Lehman, Xi Yang, Chin Guok, Harvey Newman, Justas Balcas, and Thomas Hutton. 2022. In Practice and Experience in Advanced Research Computing (PEARC '22). Association for Computing Machinery, New York, NY, USA, Article 53, 1–4. https://doi.org/10.1145/3491418.3535134 (08 Jul 2022).

- Systematic benchmarking of HTTPS third party copy on 100Gbps links using XRootD, Fajardo, Edgar, Aashay Arora, Diego Davila, Richard Gao, Frank Würthwein, and Brian Bockelman, arXiv:2103.12116 (2021). (Submitted to CHEP 2019) (22 Mar 2021).

- WLCG Authorisation from X.509 to Tokens, Brian Bockelman and Andrea Ceccanti and Ian Collier and Linda Cornwall and Thomas Dack and Jaroslav Guenther and Mario Lassnig and Maarten Litmaath and Paul Millar and Mischa Sallé and Hannah Short and Jeny Teheran and Romain Wartel, arXiv:2007.03602 [cs.CR] (Submitted to CHEP 2019) (08 Nov 2019).

- Third-party transfers in WLCG using HTTP, Brian Bockelman and Andrea Ceccanti and Fabrizio Furano and Paul Millar and Dmitry Litvintsev and Alessandra Forti, arXiv:2007.03490 [cs.DC] (Submitted to CHEP 2019) (08 Nov 2019).