How did the universe begin? Why is there something instead of nothing? What is reality composed of at the smallest of scales?

These are just some of the profound questions that high-energy physics seeks to answer. To get closer to the answers, the field needs new generations of talented researchers able to wield the latest in software technologies to parse ever larger and more complicated datasets.

Nurturing this next generation is a goal of the Institute for Research and Innovation in Software for High Energy Physics (IRIS-HEP), a $25M software institute funded by the National Science Foundation and headquartered within the Princeton Institute for Computational Science and Engineering (PICSciE). Through the IRIS-HEP fellowship program, undergraduate students get the chance to work on projects that develop their programming skills.

This story is the third in a series about gap-year IRIS-HEP fellows and how the program has helped shape their careers.

Elliott Kauffman recalls being keen on building things and solving puzzles as a child. That general fascination came into sharp focus in middle school, when he watched a documentary about the discovery of the Higgs boson. Nicknamed the “god particle,” the Higgs boson is the signature of the field that gives elementary particles their mass. Its existence was confirmed in 2012 at the Large Hadron Collider (LHC), the most powerful particle collider and the largest machine humans have ever built.

Upon learning these astounding facts, Kauffman was hooked. “I didn’t really know what physics was, but after watching the Higgs boson documentary, I was like ‘Wait, that’s super cool,’” says Kauffman. “I think it’s amazing how physicists can break down our universe into the smallest components and investigate them.”

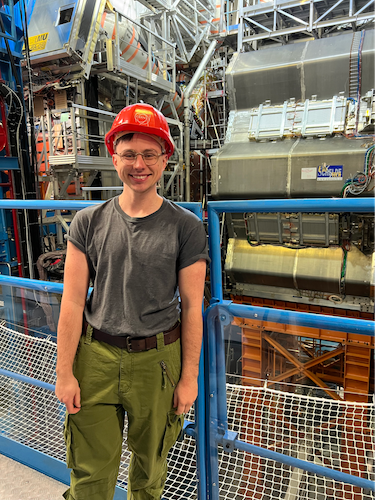

That passion has carried Kauffman to Princeton University, where he is now a PhD student in physics. Instrumental to his success have been his experiences with IRIS-HEP, first as a summer fellow and then during a gap year after he earned his undergraduate degree.

“I didn’t have much of a computer science background before, and that’s a big advantage to have when you want to work in high-energy physics,” says Kauffman. “I felt like developing those skills in IRIS-HEP helped me a long way, and it’s still helping me to this day.”

Elliott Kauffman giving a talk at the 26th International Conference on Computing in High Energy & Nuclear Physics (CHEP) 2023 in Norfolk, VA. Photo Credit: Oksana Shadura, University of Nebraska-Lincoln.

Kauffman first joined IRIS-HEP in the summer of 2021, following his junior year at Duke University. During that fellowship at CERN, home of the LHC in Geneva, Switzerland, Kauffman worked on a project called PV Finder. “PV” stands for “primary vertex,” the point where two particles smash into each other after being whipped around inside a particle accelerator. These collisions shatter the matter into its constituent particles, which can fleetingly recombine to yield massive, short-lived particles such as the Higgs boson. The decay chains of these ephemeral particles are then recorded by the experiments’ detectors. Using software, researchers can work backwards through each decay chain to infer the properties of the particles involved.

Figuring out exactly where a decay chain started is essential to this kind of retrospective analysis. PV Finder is a deep-learning algorithm that reconstructs PVs from the most likely paths, or tracks, taken by the particles as they propagate through the detector. In this way, PV Finder helps untangle a complex mess of interacting particle shrapnel into coherent data.

Working with Princeton computational physicist and IRIS-HEP member Henry F. Schreiner, along with colleagues at other universities, Kauffman helped extend PV Finder beyond its original experiment, LHCb, to two general-purpose LHC experiments: CMS (Compact Muon Solenoid) and ATLAS. Kauffman explains that these two pose a harder challenge because they record roughly 10 times as many simultaneous particle collisions as LHCb, meaning far more overlapping data to disentangle. Physicists call these overlapping collisions within a single snapshot “pileup.” “It’s a more difficult problem with CMS and ATLAS because you have a lot more pileup,” says Kauffman.

The challenge will only grow as the LHC undergoes a major upgrade that culminates in the High-Luminosity LHC (HL-LHC), slated to begin its observation run in 2030. Compared with the collider’s current design, the HL-LHC will boost the number of particle collisions by a factor of roughly 5 to 7.5, significantly increasing the data yield and the potential for discovery. This performance will come from new equipment, including powerful magnets and superconducting power lines, that makes the particle beams more focused and intense. The upshot will be denser bunches of particles flying through one another, increasing the odds of the desired collisions.

Elliott during a visit to India. Photo Credit: Elliott Kauffman, Princeton University.

In his second IRIS-HEP fellowship, Kauffman went on to help prepare for this next phase of the LHC. That opportunity came about as he was reflecting on his professional trajectory. During his senior year at Duke, Kauffman felt overwhelmed by his studies and decided it was best to take a break after graduation. Through Mike Sokoloff, a physicist at the University of Cincinnati and one of his IRIS-HEP mentors, Kauffman learned about gap-year fellowship opportunities at CERN. Intrigued, he applied successfully and started in October 2022.

“I figured that being at CERN for a year, I would have a good idea of whether I wanted to do high-energy physics or not. And the answer was ‘yes,’” Kauffman says. “As soon as I got to CERN, I realized I’m surrounded by people who all work on high energy physics, and they’re so passionate and excited. This is where I belong.”

At CERN, Kauffman worked on a software initiative called the Analysis Grand Challenge. The initiative aims to unite various efforts within IRIS-HEP into a single analysis pipeline for high-energy physics data, especially the gargantuan volumes the HL-LHC will churn out. Kauffman helped add machine learning to the pipeline to speed up number crunching and data presentation for users.

“These projects are geared toward what analysis might look like during High-Lumi LHC,” says Kauffman, using shorthand for the upgraded collider. “It’ll be cool to see a lot of the tools we’re developing now actually pay off and be put into practice.”

Looking ahead, Kauffman hopes to take part in HL-LHC analysis as data pour in during the 2030s. He is excited about what the future holds and grateful for the network of professional connections he has built through IRIS-HEP.

“I still find being in contact with IRIS-HEP people very useful because they’re all so passionate and available,” Kauffman says. “I know whenever I have a question about something, I know who to ask and who to go to.”