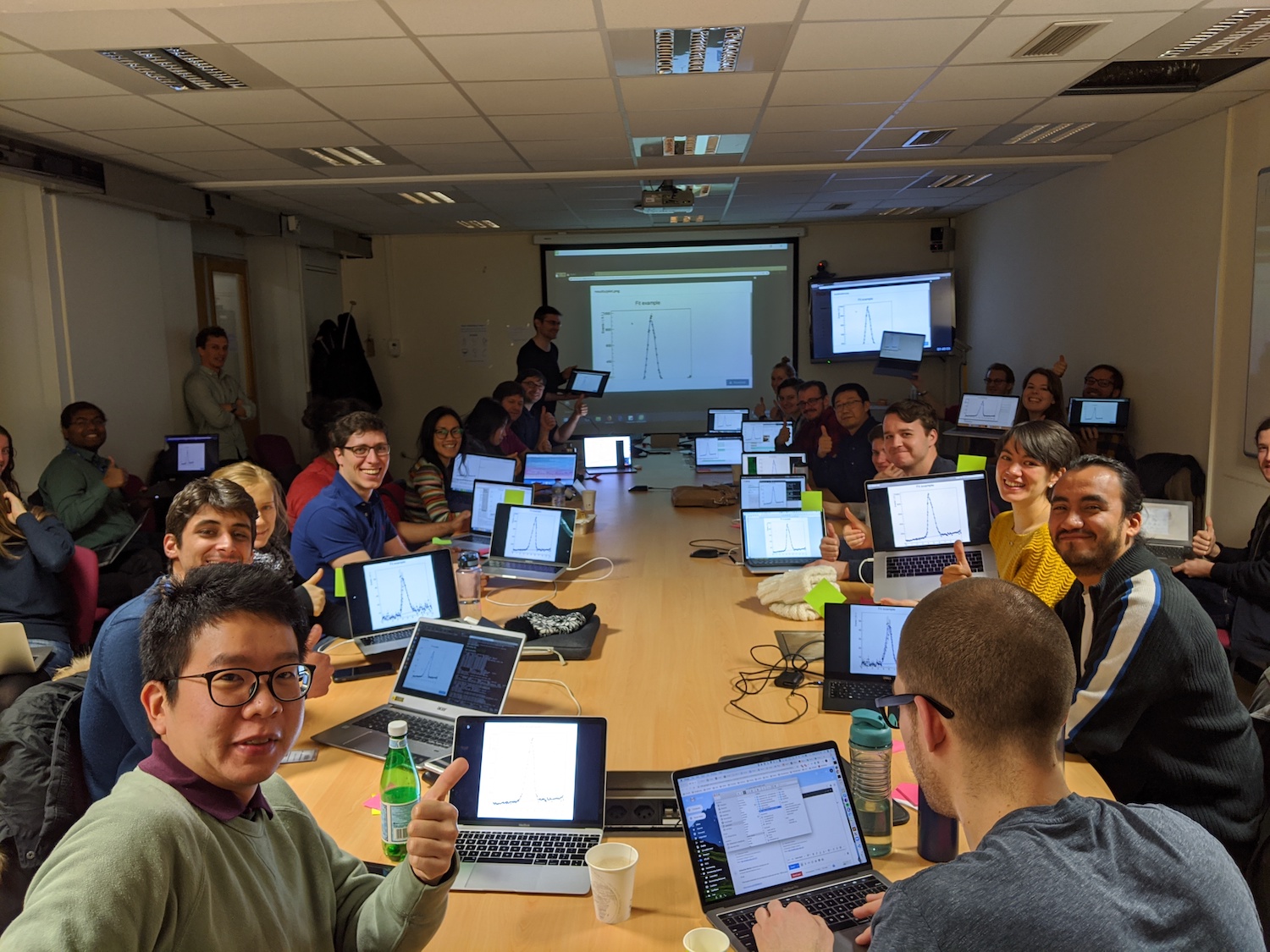

One of the cross-cutting themes of IRIS-HEP’s Analysis Systems effort is to promote analysis preservation and reuse. This includes streamlining how analysis code is captured while it is being developed, as well as building analysis systems that allow those components to be reused efficiently. To learn how to properly archive software-heavy data analyses, 30 young graduate students and postdocs gathered at CERN for a three-day intensive “Analysis Preservation Bootcamp” funded by IRIS-HEP. The workshop was organized by Sam Meehan, Lukas Heinrich and Clemens Lange (CERN), together with IRIS-HEP postdoc Savannah Thais (Princeton), and participants could count on a great team of volunteer mentors to help them through any technical difficulties.

The goal of analysis preservation is not only to ensure reproducibility but also to extend the impact of the analyses performed by LHC experimentalists. Preservation technologies streamline the onboarding of new collaborators, aid the analysis development process, and can allow theorists to reinterpret the results of previous searches.

“I thought it was super useful, I feel way less overwhelmed by discussions about these tools and do feel empowered to make changes/improvements to the setup my analysis already uses,” remarked one participant in an exit survey.

Instructors Danika MacDonell and Giordon Stark working with participants. Photo Credit: Samuel Meehan.

These efforts also complement open data initiatives in a vital way, as outlined in the opinion piece “Open is not enough”. This has been a long-running theme of NSF-funded projects, including DASPOS and DIANA/HEP, which supported early work on RECAST, a proposed service for reinterpreting the results of searches at the LHC.

While the publications themselves already offer a great deal of detail about the studies, the huge amounts of data collected at the LHC mean that the real logic of the analyses is often encoded in software, from the precise way in which collisions are selected to the statistical treatment leading to the final measurement.

Several technical challenges had to be overcome to make RECAST a reality. The solution that emerged leveraged containerization technology widely used in cloud computing, together with workflow technologies. These tools were then generalized beyond their high-energy physics origins into REANA, which is now primarily developed at CERN with contributions from NSF-funded projects such as DASPOS, DIANA/HEP, IRIS-HEP, and SCAILFIN.

On the first two days, students learned to use modern software engineering and cloud computing tools such as version control, continuous integration, and Docker containers, so that analysis code and its development history are properly archived and portably packaged for use on any cloud computing platform. On the third and final day, Tibor Simko of the REANA project showed the students how to define computational workflows that preserve the order of the analysis steps required to carry an analysis from the initial event selection all the way to the final result. Among other things, REANA is used as a computational backend for the reinterpretation framework RECAST and will thus play a key role in ongoing summary studies at the LHC.

With the second run of the LHC finished and the upgrade for Run-3 underway, some time will pass before the four LHC experiments have collected enough new data to significantly increase their sensitivity to Beyond Standard Model phenomena or the precision of Standard Model measurements. This implies that the analyses currently being finalized on the full Run-2 dataset will remain the most precise studies of LHC-scale physics for a long time. It is thus crucial that the details of those analyses are fully preserved. Reliably reproducing the published results is important, but the ability to reuse analyses also enables reinterpretations and new meta-analyses that study the overall impact of the LHC experiments on our understanding of nature. This is one reason why ATLAS now requires all searches for new physics to be archived in such a reusable manner.

Instructor Giordon Stark working with participants. Photo Credit: Samuel Meehan.

Acknowledgements

Organizers:

- Clemens Lange

- Lukas Alexander Heinrich

- Samuel Ross Meehan

- Savannah Jennifer Thais

Instructors:

- Giordon Stark

- Danika MacDonell

- Tibor Simko

Mentors:

- Emma Torro

- Mason Proffitt

- Leonora Vesterbacka

- Stefan Wunsch

- Thea Aarrestad

- Brendan Regnery

- Rokas Maciulaitis

- Diego Rodriguez Rodriguez

- Marco Vidal

Reactions from participants shared on Twitter

Thoroughly enjoying myself at an @iris_hep/@diana_hep analysis preservation bootcamp @CERN today! https://t.co/AGNpmuHX1X pic.twitter.com/b0D1TfKSXv

— Josh McFayden (@JoshMcFayden) February 17, 2020

Today: REANA ✅ pic.twitter.com/s98F8z8QFk

— Josh McFayden (@JoshMcFayden) February 19, 2020

And the result of all this hard work using @reanahub? (Re)discovering Higgs→ττ: pic.twitter.com/1fevgqWwaR

— Josh McFayden (@JoshMcFayden) February 19, 2020

Related events

Today is #Docker day at the #USATLASComputingBootcamp! This morning I taught an introduction to Docker and this afternoon Danika Macdonell is teaching how to use Docker in ATLAS’s #RECAST for analysis preservation and reinterpretation. 👍🚀 https://t.co/HTXCuU2AJH pic.twitter.com/UDr9i9nFVK

— Matthew Feickert (@HEPfeickert) August 22, 2019