Coffea-Casa Analysis Facility

The HL-LHC era will bring more than an order-of-magnitude increase in event counts for analysts. The increased data volume will force physicists to adopt new methods and approaches; what fit comfortably on a laptop for the LHC will require a distributed system for the next generation.

Coffea-Casa is a prototype analysis facility that provides services for “low latency columnar analysis”, enabling rapid column-wise processing of data. This provides an interactive experience and quick “initial results” while scaling to full datasets.
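To make the idea of column-wise processing concrete, here is a minimal sketch in plain Python. It is not Coffea or Coffea-Casa code: the column names (`pt`, `eta`), the values, and the `select` helper are invented for illustration, and a real analysis would use array libraries rather than lists. The point is only that each quantity is stored as one array and each cut is applied to a whole column at a time.

```python
from array import array

# Toy event data stored column-wise: one contiguous array per quantity.
# The column names ("pt", "eta") and all values are invented for illustration.
events = {
    "pt": array("d", [25.0, 41.5, 12.3, 60.2, 33.3]),
    "eta": array("d", [0.5, -1.2, 2.9, 0.1, -2.1]),
}


def select(columns, pt_min, abs_eta_max):
    """Return a boolean mask, evaluating each cut over one whole column."""
    pt_ok = [pt > pt_min for pt in columns["pt"]]
    eta_ok = [abs(eta) < abs_eta_max for eta in columns["eta"]]
    # Combine the per-column masks into a single per-event mask.
    return [a and b for a, b in zip(pt_ok, eta_ok)]


mask = select(events, pt_min=20.0, abs_eta_max=2.4)
# Apply the mask to a column to keep only the selected events.
selected_pt = [pt for pt, keep in zip(events["pt"], mask) if keep]
```

Because the cuts only touch the columns they need, an analysis reading two quantities never has to load the rest of each event.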

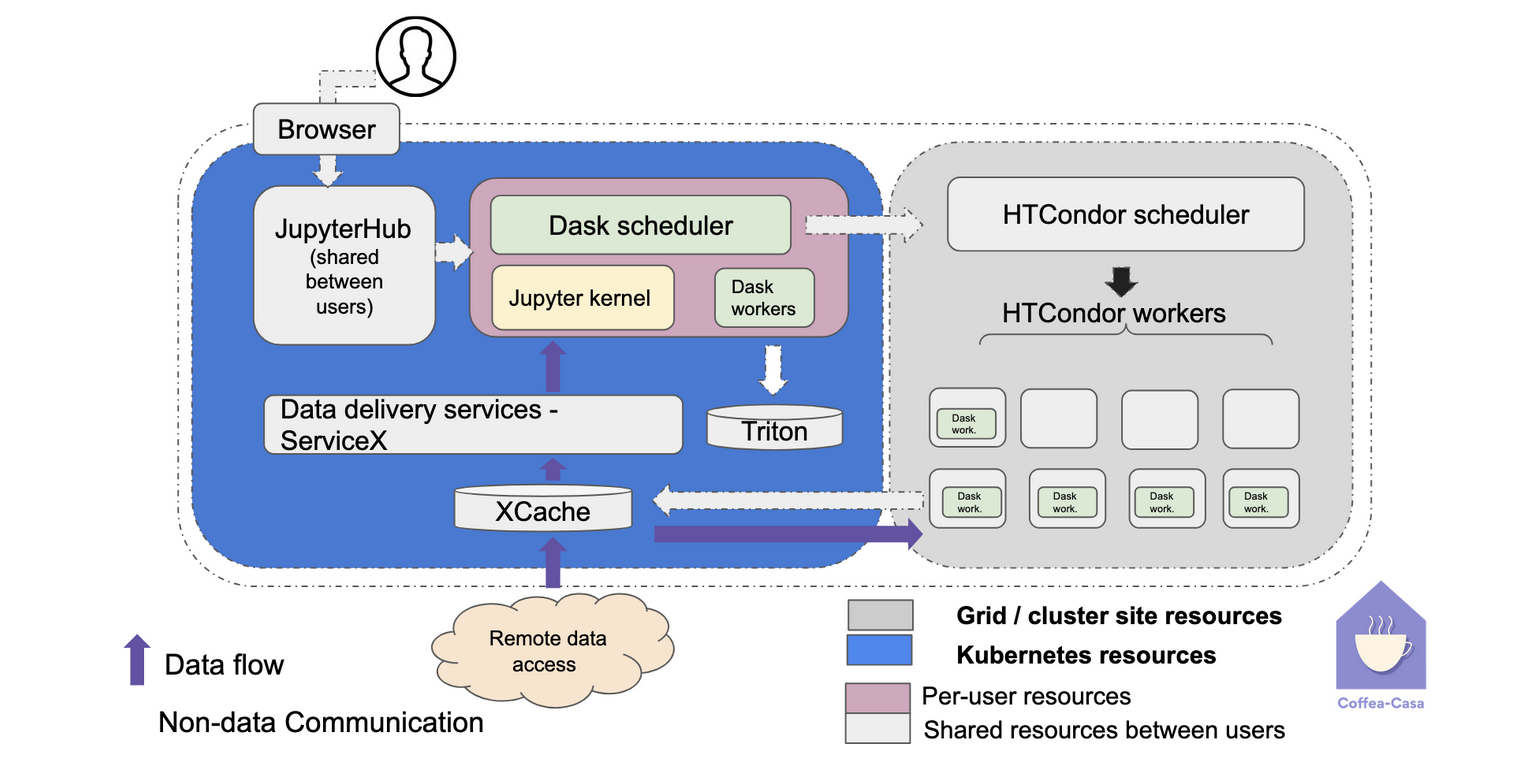

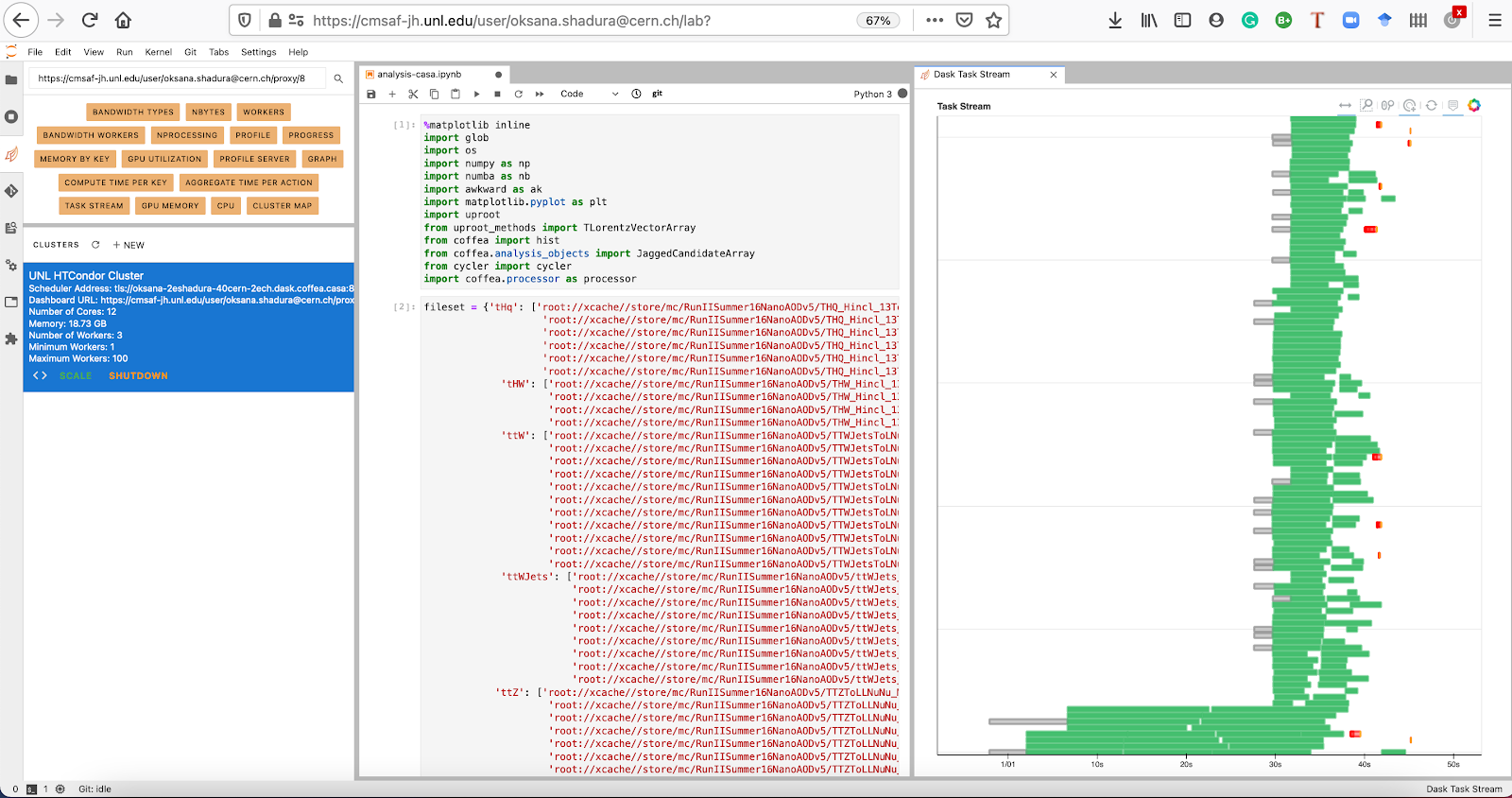

These services, based on the Dask parallelism library and Jupyter notebooks, aim to dramatically lower the time for analysis and provide an easily scalable and user-friendly computational environment that will simplify and accelerate the delivery of particle physics measurements. The facility is built on top of a Kubernetes cluster and integrates dedicated resources along with resources allocated via fairshare through the local HTCondor system. In addition to the user-facing interfaces such as Dask, the facility also manages access control through a common single-sign-on authentication & authorization for data access (the data access strategy aligns with the new authorization technologies used by OSG-LHC).

After authentication (e.g., via the CERN SSO), the user is presented with a Jupyter notebook interface that can be populated with code from a Git repository specified by the user. When the notebook is executed, the processing automatically scales out to available resources (such as the Nebraska Tier-2 facility for the SSL instance at Nebraska), giving the user transparent interactive access to a large computing resource. The CMS instance of the facility has access to the entire CMS data set, thanks to the global data federation and local caches. It supports the Coffea framework, which provides a declarative programming interface that treats the data in its natural columnar form.
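The scale-out step described above can be pictured as splitting a column into chunks, processing each chunk independently, and merging the partial results. The following is a rough sketch of that pattern only. It uses a thread pool from the Python standard library as a stand-in for the facility's Dask workers, and the function names, bin width, and data are all hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def process_chunk(pt_values, bin_width=20.0):
    """Fill a partial histogram (bin index -> count) for one chunk of a column."""
    return Counter(int(pt // bin_width) for pt in pt_values)


def run_scaled_out(chunks, max_workers=4):
    """Process chunks in parallel and merge the partial histograms.

    The thread pool is only a local stand-in for distributed workers.
    """
    total = Counter()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for partial in pool.map(process_chunk, chunks):
            total += partial  # merging is a simple per-bin sum
    return total


# Invented data: three chunks of a transverse-momentum column.
chunks = [[25.0, 41.5, 12.3], [60.2, 33.3], [5.0, 99.9, 45.0]]
histogram = run_scaled_out(chunks)
```

Since the partial histograms add bin by bin, the merged result does not depend on how the data were chunked or on the order in which workers finish, which is what lets the same notebook code run on one core or on many.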

An important feature is access to a “column service” like ServiceX; if a user is working with a compact data format (such as CMS NanoAOD or ATLAS PHYSLITE) that is missing a data element that the user needs, the facility can be used to serve that “column” from a remote site. This allows only the compact data formats to be stored locally and augmented only as needed, a critical strategy for CMS and ATLAS to control the costs of storage in the HL-LHC era.
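As a sketch of the "augment only as needed" strategy, the toy class below keeps a compact set of columns locally and requests a missing column from a remote source the first time it is asked for. This is not the ServiceX API: `ColumnStore`, `fake_remote_service`, and the column name `extra_var` are hypothetical names introduced purely for illustration.

```python
class ColumnStore:
    """Toy store: serves local columns and fetches missing ones on demand."""

    def __init__(self, local_columns, fetch_remote):
        self._columns = dict(local_columns)
        self._fetch_remote = fetch_remote  # callable: column name -> values
        self.remote_requests = []          # record of columns fetched remotely

    def get(self, name):
        if name not in self._columns:
            # Column is absent from the compact local format: fetch and cache it.
            self.remote_requests.append(name)
            self._columns[name] = self._fetch_remote(name)
        return self._columns[name]


def fake_remote_service(name):
    """Stand-in for a remote column service; returns invented values."""
    remote_data = {"extra_var": [0.1, 0.7, 0.3]}
    return remote_data[name]


store = ColumnStore({"pt": [25.0, 41.5, 12.3]}, fake_remote_service)
pt = store.get("pt")                  # served from local storage
extra = store.get("extra_var")        # fetched remotely, then cached
extra_again = store.get("extra_var")  # served from the cache
```

Only the one missing column crosses the network, and only once, so the locally stored format can stay compact.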

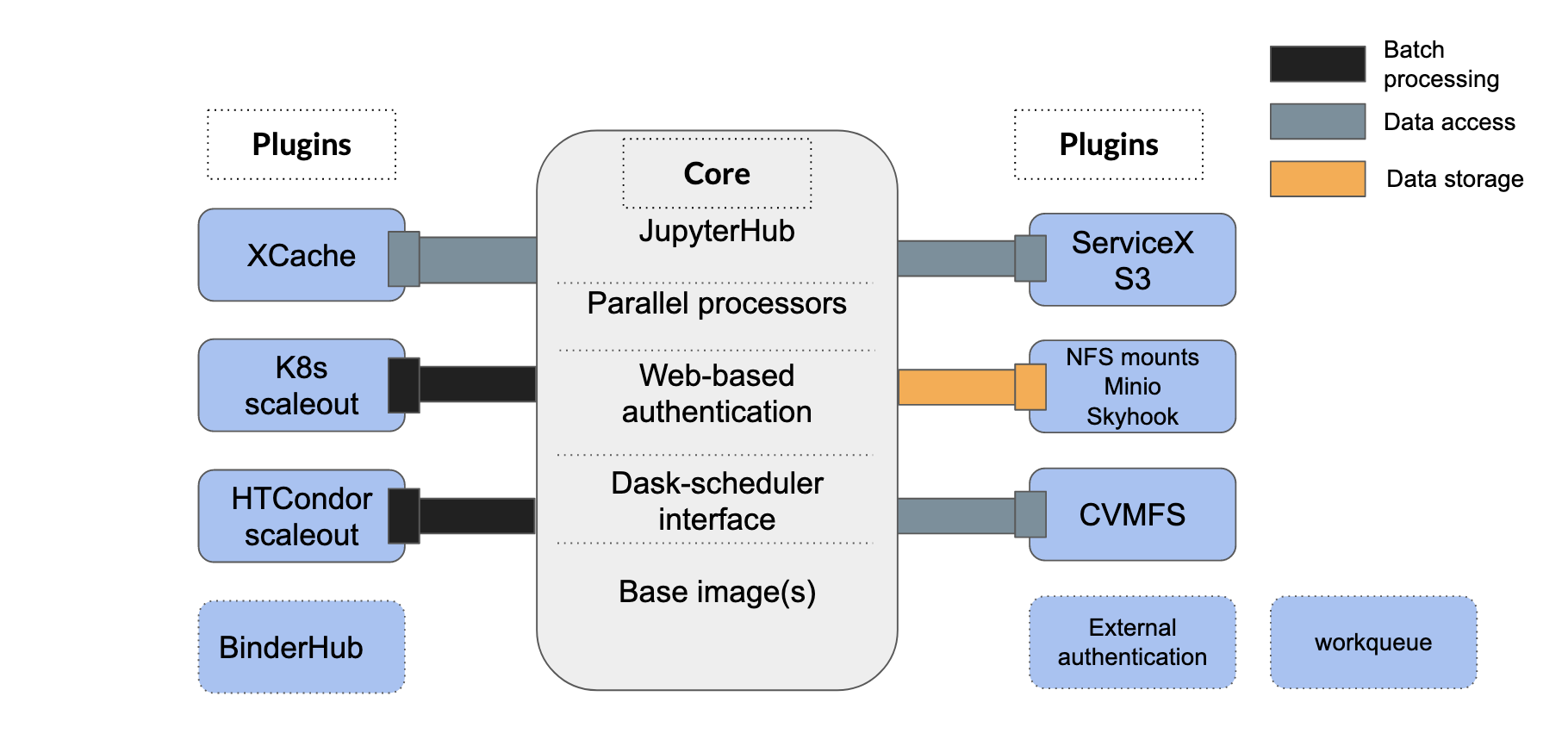

Core software components and other developed plugins that were used in the design of the Coffea-Casa analysis facility:

Coffea-casa repositories and related resources

More information can be found in the corresponding repository:

Recent accomplishments and plans

Recent accomplishments:

- The CMS facility, deployed at the Nebraska Tier-2 center, is accommodating its first CMS users: try it! More than 150 users have used the CMS facility in recent years.

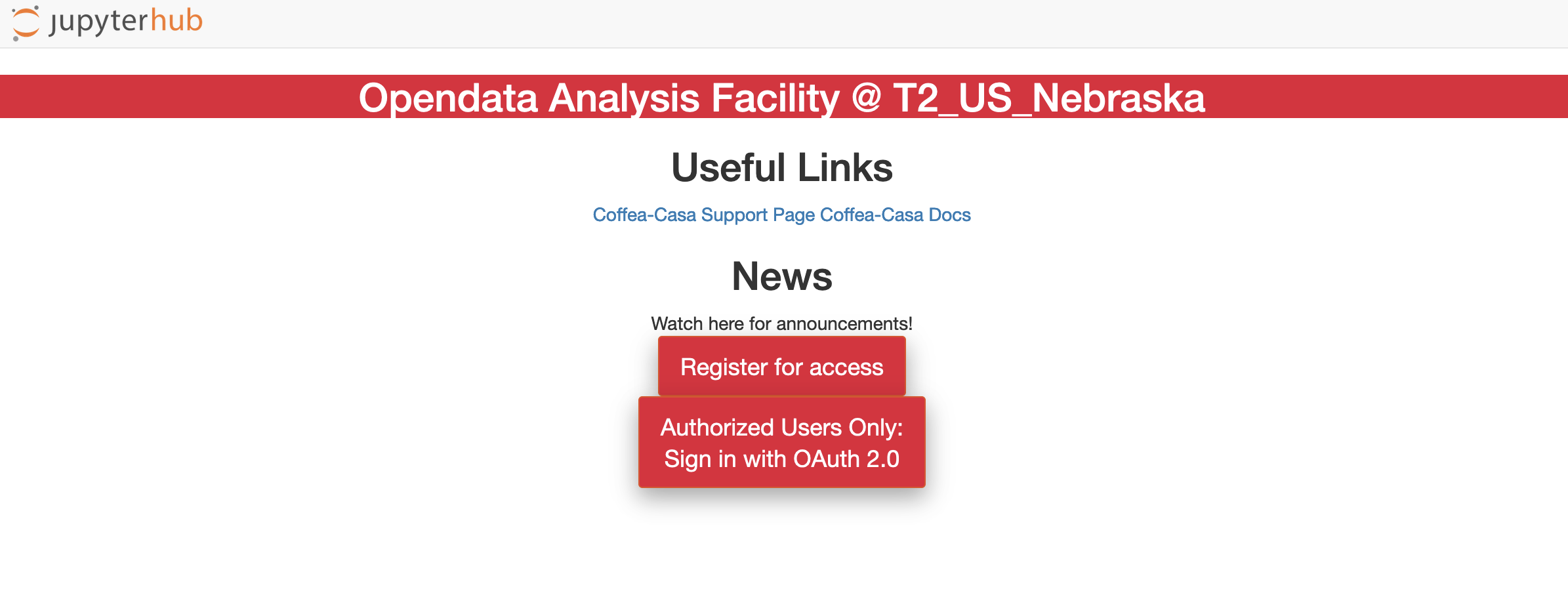

- For non-CMS users, we have enabled the Opendata coffea-casa facility: try it! More than 60 users have used the Opendata facility in recent years.

- For ATLAS physicists, an ATLAS Coffea-Casa analysis facility instance has been deployed at the University of Chicago.

- The coffea-casa analysis facility is a key component for IRIS-HEP Analysis Grand Challenge preparations.

- Both the Opendata coffea-casa analysis facility at the University of Nebraska-Lincoln and the ATLAS analysis facility instance at the University of Chicago were used to showcase various Python analysis packages and services for the Analysis Grand Challenge Tools workshop 2021 and Analysis Grand Challenge Tools workshop 2022.

Future plans for 2023:

- Recruit more physics analysis groups to use the facility.

- Benchmark various software components and packages deployed at the Coffea-Casa analysis facilities at the University of Nebraska-Lincoln and at the University of Chicago.

- Prepare and execute the Analysis Grand Challenge at the Coffea-Casa analysis facilities deployed at the University of Nebraska-Lincoln and the University of Chicago, as well as at other facilities.

Recent videos and tutorials

- The Coffea-Casa analysis facility demo - YouTube video at Analysis Grand Challenge Tools workshop 2022

- The Coffea-Casa analysis facility demo “Scale-out with coffea: coffea-casa” - YouTube video at Analysis Grand Challenge Tools workshop 2021

- The Coffea-Casa analysis facility introduction - YouTube video at PyHEP 2020

- The Coffea-Casa tutorial “Coffea columnar analysis at scale” - YouTube video at PyHEP 2020

Fellows

Team

- Oksana Shadura

- Ken Bloom

- Carl Lundstedt

- John Thiltges

- Brian Bockelman

- Garhan Attebury

Presentations

- 29 Jul 2025 - "Integration Challenge - Next Steps", Oksana Shadura, IRIS-HEP Steering Board Meeting

- 10 Jun 2025 - "200 Gbps Challenge v2", Oksana Shadura, US CMS Analysis Facility Meeting

- 15 May 2025 - "Run-3 CMS compute model", Oksana Shadura, Physics analysis at the HL-LHC

- 15 May 2025 - "CMS survey results", Oksana Shadura, Physics analysis at the HL-LHC

- 6 May 2025 - "CMS analysis facility contribution", Oksana Shadura, WLCG/HSF Workshop 2025

- 8 Apr 2025 - "200 Gbps Challenge", Oksana Shadura, US CMS Analysis Facility Meeting

- 24 Oct 2024 - "Tuning the CMS Coffea-casa facility for 200 Gbps Challenge", Oksana Shadura, Conference on Computing in High Energy and Nuclear Physics (CHEP 2024)

- 24 Oct 2024 - "LHCC Focus Session on WLCG - Analysis Facilities", Oksana Shadura, O&C Weekly Meeting

- 4 Sep 2024 - "AGC & IDAP / 200 Gbps", Oksana Shadura, IRIS-HEP Institute Retreat 2024

- 2 Sep 2024 - "Facilities R&D HSF highlights", Oksana Shadura, The 8th Asian Tier Center Forum

- 18 Jun 2024 - "Analysis Grand Challenge", Oksana Shadura, Analysis Facilities Workshop

- 11 Jun 2024 - "Coffea-casa and 200 Gbps challenge - experience with Kubernetes", Oksana Shadura, 2024 All-Hands Workshop of the U.S. CMS Software and Computing Operations Program

- 9 Jun 2024 - "The 200 Gbps Challenge at Nebraska", Oksana Shadura, US CMS Analysis Facility Meeting

- 8 May 2024 - "Notes about AF users UX feedback", Oksana Shadura, Common Analysis Tools (CAT) general meeting

- 19 Mar 2024 - "View from HSF - HSF AF White Paper Overview", Oksana Shadura, CMS Spring 2024 Offline and Computing Week

- 15 Mar 2024 - "Introduction - IRIS-HEP Data Analysis Pipeline (IDAP)", Oksana Shadura, IRIS-HEP Data Analysis Pipeline (IDAP) meeting

- 5 Mar 2024 - "Analysis Grand Challenge (AGC)", Oksana Shadura, US CMS Analysis Facility Meeting

- 10 Jan 2024 - "AGC Deep Dive", Oksana Shadura, NSF / IRIS-HEP Meeting (January 2024)

- 5 Dec 2023 - "Updates on Coffea-Casa AF", Oksana Shadura, Common Analysis Tools (CAT) general meeting

- 14 Sep 2023 - "AGC Team End-to-End Demo", Alexander Held, IRIS-HEP AGC Demonstration 2023

- 12 Sep 2023 - "Future Analysis Facilities R&D", Oksana Shadura, IRIS-HEP Institute Retreat

- 12 Sep 2023 - "Current Plans for AGC", Oksana Shadura, IRIS-HEP Institute Retreat

- 11 Sep 2023 - "Focus Area - Analysis Grand Challenge", Oksana Shadura, IRIS-HEP Institute Retreat

- 27 Jul 2023 - "Analysis Grand Challenge & Coffea-Casa analysis facility as a test environment for packages and services", Oksana Shadura, PyHEP.dev 2023 - "Python in HEP" Developer's Workshop

- 24 Jul 2023 - "Analysis Grand Challenge Demo - Hands-on Demo Session", Oksana Shadura, Computational HEP Traineeship Summer School

- 11 Jul 2023 - "Analysis Grand Challenge", Oksana Shadura, IRIS-HEP / Ops Program Analysis Grand Challenge Planning

- 24 May 2023 - "Coffea-casa analysis facility", Oksana Shadura, Common Analysis Tools (CAT) general meeting (CMS internal)

- 9 May 2023 - "Coffea-Casa - Building composable analysis facilities for the HL-LHC", Oksana Shadura, 26th International Conference on Computing in High Energy & Nuclear Physics

- 5 May 2023 - "Analysis Grand Challenge workshop closing", Alexander Held, IRIS-HEP Analysis Grand Challenge workshop 2023

- 3 May 2023 - "Analysis Grand Challenge workshop introduction", Alexander Held, IRIS-HEP Analysis Grand Challenge workshop 2023

- 23 Mar 2023 - "Coffea-casa analysis facility", Oksana Shadura, International Symposium on Grids & Clouds (ISGC) 2023 in conjunction with HEPiX Spring 2023 Workshop

- 23 Mar 2023 - "Physics analysis workflows and pipelines for the HL-LHC", Alexander Held, International Symposium on Grids & Clouds (ISGC) 2023

- 14 Mar 2023 - "Analysis Grand Challenge updates", Alexander Held, IRIS-HEP / Ops Program Analysis Grand Challenge Planning

- 5 Mar 2023 - "CMS O&C CDR - Evolution of Analysis Facilities", Oksana Shadura, CMS O&C Upgrade R&D on Workflow Management and Computing Infrastructure

- 24 Jan 2023 - "Analysis Grand Challenge updates", Alexander Held, IRIS-HEP / Ops Program Analysis Grand Challenge Planning

- 15 Nov 2022 - "Analysis Grand Challenge updates", Oksana Shadura, IRIS-HEP / Ops Program Analysis Grand Challenge Planning

- 3 Nov 2022 - "IRIS-HEP AGC update", Alexander Held, HSF Analysis Facilities Forum

- 25 Oct 2022 - "First performance measurements with the Analysis Grand Challenge", Oksana Shadura, ACAT 2022

- 12 Oct 2022 - "AGC - Perspective from focus areas and projects", Oksana Shadura, IRIS-HEP Institute Retreat

- 12 Oct 2022 - "AGC overview, status and plans", Alexander Held, IRIS-HEP Institute Retreat

- 4 Oct 2022 - "Analysis Grand Challenge updates", Oksana Shadura, IRIS-HEP / Ops Program Analysis Grand Challenge Planning

- 23 Aug 2022 - "Analysis Grand Challenge updates", Alexander Held, IRIS-HEP / Ops Program Analysis Grand Challenge Planning

- 16 Jul 2022 - "Analysis Facilities", Oksana Shadura, Seattle Snowmass Summer Meeting 2022

- 12 Jul 2022 - "Report from Analysis Ecosystems II Workshop", Oksana Shadura, Software & Computing Round Table (2022)

- 14 Jun 2022 - "Analysis Grand Challenge updates", Alexander Held, IRIS-HEP / Ops Program Analysis Grand Challenge Planning

- 25 May 2022 - "Analysis Facilities - Summary", Oksana Shadura, Analysis Ecosystem Workshop II

- 23 May 2022 - "Analysis user experience with the Python HEP ecosystem", Jim Pivarski, Analysis Ecosystems Workshop II

- 3 May 2022 - "Next steps for coffea-casa AF", Oksana Shadura, https://docs.google.com/presentation/d/1rINHkaqozp-RD9Bu-fWZtbQ1ktocw8d5fQ1sFMRjW7k/edit?usp=sharing

- 3 May 2022 - "AGC at US CMS AFs", Carl Lundstedt, IRIS-HEP Analysis Grand Challenge workshop 2023

- 25 Apr 2022 - "IRIS-HEP Analysis Grand Challenge Tools Workshop", Oksana Shadura, IRIS-HEP AGC Tools 2022 Workshop

- 25 Apr 2022 - "Scale-out with coffea: coffea-casa analysis facility", Carl Lundstedt, IRIS-HEP AGC Tools (April) 2022 Workshop

- 8 Apr 2022 - "CompF4 Analysis Facility - Discussion & Priorities", Oksana Shadura, Snowmass CompF4 Topical Group Workshop

- 5 Apr 2022 - "Analysis Grand Challenge updates", Oksana Shadura, IRIS-HEP / Ops Program Analysis Grand Challenge Planning

- 1 Apr 2022 - "Report about HSF Analysis Facilities Forum Kick-off meeting", Oksana Shadura, CMS Spring 2022 O&C Week

- 1 Mar 2022 - "Analysis Grand Challenge updates", Alexander Held, IRIS-HEP / Ops Program Analysis Grand Challenge Planning

- 28 Jan 2022 - "Analysis Grand Challenge updates", Oksana Shadura, IRIS-HEP / Ops Program Analysis Grand Challenge Planning

- 16 Dec 2021 - "Analysis Grand Challenge updates", Oksana Shadura, IRIS-HEP Executive Board / Ops Program Grand Challenge Discussion

- 30 Nov 2021 - "Analysis Grand Challenge updates", Alexander Held, Steering Board Meeting

- 22 Nov 2021 - "Coffea-casa news and developments", Oksana Shadura, Coffea Users Meeting

- 17 Nov 2021 - "Deep Dive - Analysis Grand Challenge", Oksana Shadura, NSF / IRIS-HEP Meeting (November 2021)

- 9 Nov 2021 - "Analysis Facilities", Oksana Shadura, CMS Operations & Computing R&D meeting

- 4 Nov 2021 - "Scale-out with coffea", Oksana Shadura, IRIS-HEP AGC Tools 2021 Workshop

- 2 Nov 2021 - "Analysis Grand Challenge", Oksana Shadura, SwiftHep/ExcaliburHep workshop

- 24 Sep 2021 - "Coffea-casa - an analysis facility prototype", Oksana Shadura, Joint AMG and WFMS Meeting on Analysis Facilities

- 9 Jun 2021 - "Advances in Analysis tools/ecosystem", Oksana Shadura, 9th Edition of the Large Hadron Collider Physics Conference

- 21 May 2021 - "Dask in High-Energy Physics community (workshop)", Oksana Shadura, Dask Distributed Summit 2021

- 21 May 2021 - "Dask at U.S.CMS analysis facilities", Carl Lundstedt, Dask Distributed Summit 2021, Dask in High Energy Physics Community, Tutorials and Workshops

- 20 May 2021 - "Coffea-casa an analysis facility prototype (plenary)", Oksana Shadura, 25th International Conference on Computing in High-Energy and Nuclear Physics

- 19 May 2021 - "Challenges Designing Interactive Analysis Facilities with Dask", Oksana Shadura, Dask Distributed Summit 2021

- 3 Feb 2021 - "Future analysis facilities", Oksana Shadura, CMS Week

- 24 Nov 2020 - "U.S. CMS Managed Analysis Facilities", Oksana Shadura, HSF WLCG Virtual Workshop

- 27 Oct 2020 - "Analysis on LHC-Managed Facilities: Coffea-Casa", Oksana Shadura, IRIS-HEP Future Analysis Systems and Facilities Blueprint Workshop

- 23 Sep 2020 - "Analysis facilities", Oksana Shadura, Upgrade R&D/CMP Meeting (Presented on Weekly CMS O&C Meeting slot)

Publications

- Collaborative Computing Support for Analysis Facilities Exploiting Software as Infrastructure Techniques, M. Flechas, G. Attebury, K. Bloom, B. Bockelman, L. Gray, B. Holzman, C. Lundstedt, O. Shadura, N. Smith and J. Thiltges, arXiv 2203.10161 (18 Mar 2022) [2 citations].

- Analysis Facilities for HL-LHC, D. Benjamin et al., arXiv 2203.08010 (15 Mar 2022) [3 citations].

- Coffea-casa: an analysis facility prototype, M. Adamec, G. Attebury, K. Bloom, B. Bockelman, C. Lundstedt, O. Shadura and J. Thiltges, EPJ Web Conf. 251 02061 (2021) (02 Mar 2021) [16 citations] [NSF PAR].